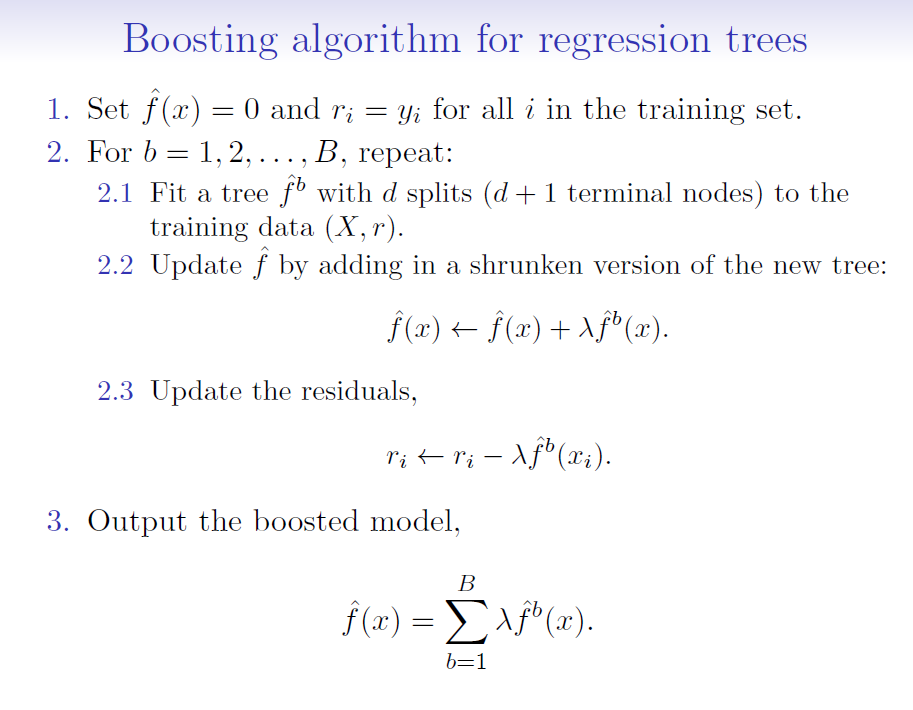

8.23 Boosting algorithm

where:

\(\hat{f}(x)\) is the decision tree (model)

\(r\) = residuals

\(d\) = number of splits in each tree (controls the complexity of the boosted ensemble)

\(\lambda\) = shrinkage parameter (a small positive number that controls the rate at which boosting learns; typically 0.01 or 0.001 but right choice can depend on the problem)

Each of the trees can be small, with just a few terminal nodes (determined by \(d\))

By fitting small trees to the residuals, we slowly improve our model (\(\hat{f}\)) in areas where it doesn’t perform well

The shrinkage parameter \(\lambda\) slows the process down further, allowing more and different shaped trees to ‘attack’ the residuals

Unlike bagging and random forests, boosting can OVERFIT if \(B\) is too large. \(B\) is selected via cross-validation