12.5 Can we make our model too good?

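Overfitting is always a concern once we start tuning hyperparameters. The more flexible a model becomes, the more closely it can fit the noise in the training set instead of the underlying pattern, and that makes it perform worse on new data.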

Tip from the book: using out-of-sample data is the way to detect when a model is overemphasizing the training set.
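To make the tip concrete, here is a minimal Python sketch, not the book's own workflow. It makes several assumptions: the data are synthetic (a sine curve plus Gaussian noise), the polynomial degree stands in for "the hyperparameter being tuned", and the helper names `rmse` and `train_vs_holdout` are hypothetical. Each model is fit only on a training split and then scored on both that split and a held-out split it never saw.

```python
import numpy as np
from numpy.polynomial import Polynomial


def rmse(y_true, y_pred):
    """Root mean squared error between two arrays."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def train_vs_holdout(degrees, n_train=20, n_test=10, noise_sd=0.3, seed=42):
    """Fit one polynomial per degree on a training split and score both splits.

    Hypothetical helper for illustration. Returns {degree: (train_rmse, holdout_rmse)}.
    """
    rng = np.random.default_rng(seed)
    n = n_train + n_test
    x = rng.uniform(0.0, 1.0, n)
    # Assumed synthetic data: a smooth signal plus random noise.
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, noise_sd, n)

    # Hold out the last n_test points; they are never used for fitting.
    x_train, y_train = x[:n_train], y[:n_train]
    x_test, y_test = x[n_train:], y[n_train:]

    results = {}
    for degree in degrees:
        # The polynomial degree plays the role of the tuning parameter.
        model = Polynomial.fit(x_train, y_train, degree)
        results[degree] = (
            rmse(y_train, model(x_train)),  # in-sample error
            rmse(y_test, model(x_test)),    # out-of-sample error
        )
    return results


if __name__ == "__main__":
    for degree, (train_err, test_err) in train_vs_holdout([1, 3, 9, 15]).items():
        print(f"degree={degree:2d}  train RMSE={train_err:.3f}  holdout RMSE={test_err:.3f}")
```

Reading the printed table from top to bottom, the training RMSE can only shrink as the degree grows, because each higher-degree fit contains the lower-degree ones as special cases. Judged on the training data alone, the most flexible model therefore always looks best; only the holdout column shows when the extra flexibility has stopped capturing signal and started memorizing noise.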

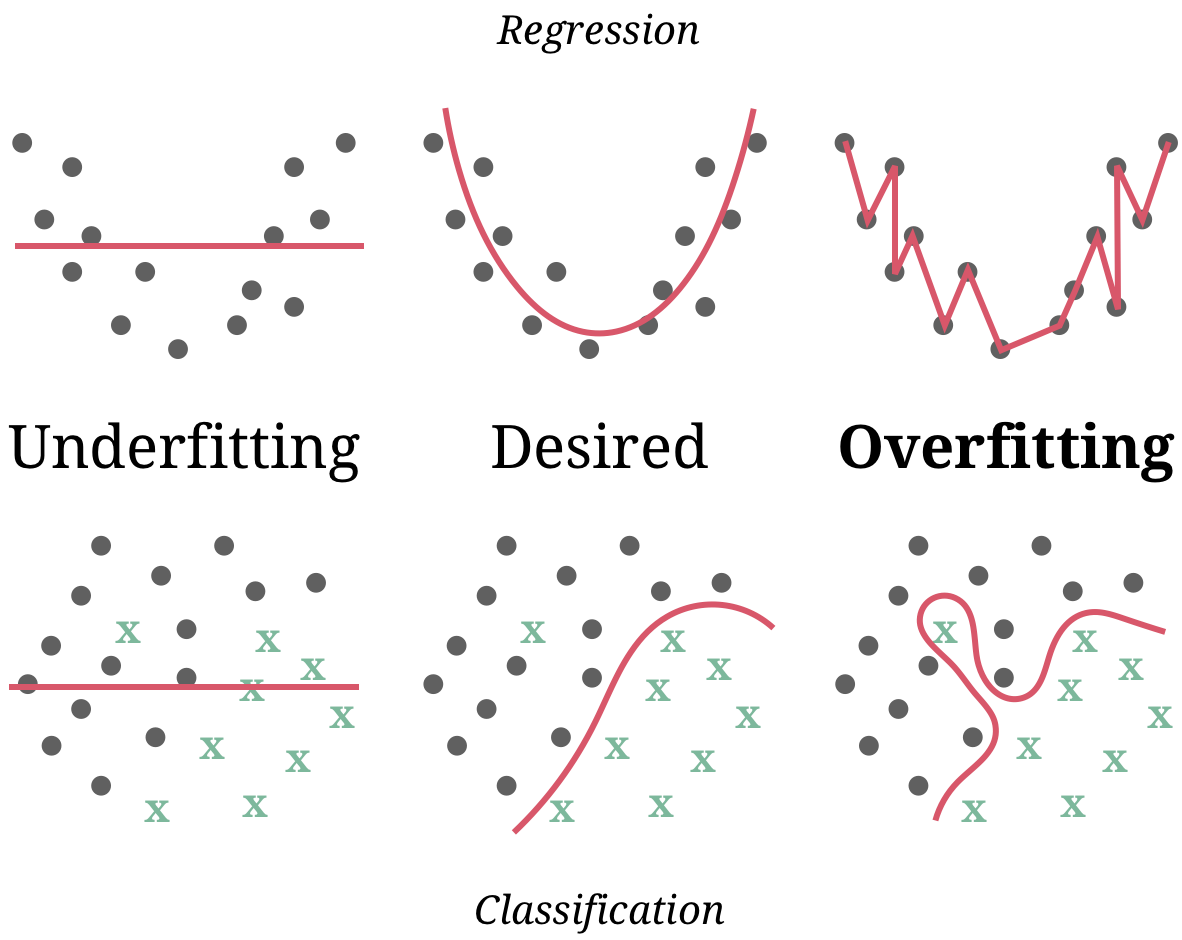

Graphs depicting what overfitting looks like.

Image credit: https://therbootcamp.github.io/ML_2019Oct/_sessions/Recap/Recap.html#8