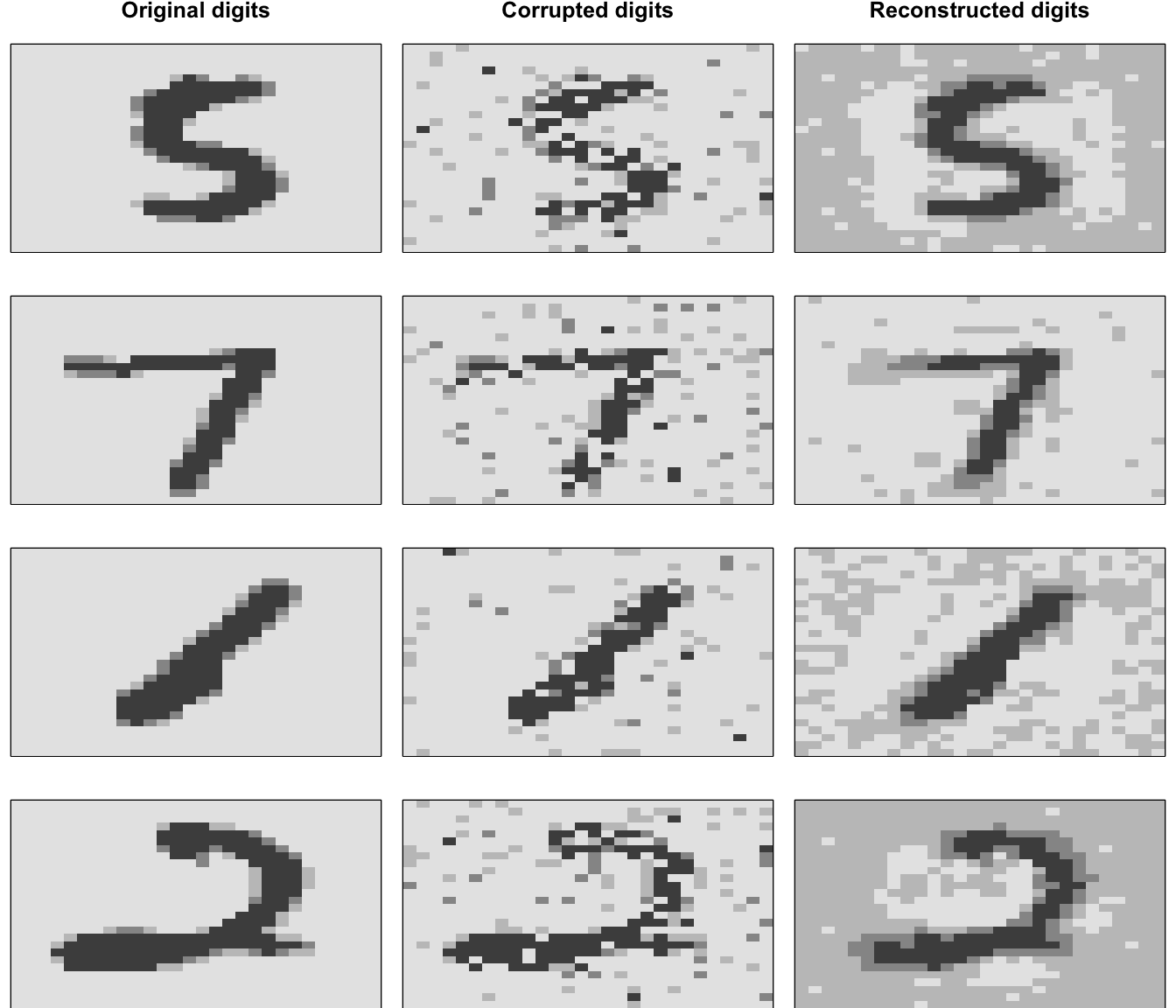

19.5 Denoising autoencoders

A denoising autoencoder is trained to reconstruct the original input from a corrupted copy of it. This forces the codings to capture robust features rather than simply copying the input, even when there are more codings than inputs.

In this case:

- The encoder function should preserve the essential signals.

- The decoder function tries to undo the effects of the corruption.
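The two roles above can be summarized with the usual denoising objective. This is only a sketch: the symbols are assumptions introduced here, not notation from the text. $f$ is the encoder, $g$ the decoder, $x_i$ an original input, and $\tilde{x}_i$ its corrupted copy drawn from a corruption process $C$.

$$
\tilde{x}_i \sim C(\tilde{x} \mid x_i), \qquad
\min_{f,\,g} \; \frac{1}{n} \sum_{i=1}^{n} \left\lVert x_i - g\big(f(\tilde{x}_i)\big) \right\rVert^2
$$

The model only ever sees $\tilde{x}_i$ as input, but the error is measured against the clean $x_i$.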

19.5.1 Corruption process

- Randomly set some of the inputs (as many as half of them) to zero or one.

- For continuous-valued inputs, we can add Gaussian noise.
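Both corruption schemes can be sketched in base R. The helper names `corrupt_mask` and `corrupt_gaussian` are hypothetical (they are not from the text or from any package). The toy matrix `x` is assumed to hold inputs already scaled to [0, 1], and the noise level `sd = 0.1` is an arbitrary choice for illustration.

```r
# Hypothetical helpers illustrating the two corruption schemes described above.

# Masking noise: each entry is independently replaced by `value` (0 or 1)
# with probability `p`, so roughly a fraction `p` of the inputs is corrupted.
corrupt_mask <- function(x, p = 0.5, value = 0) {
  stopifnot(p >= 0, p <= 1)
  mask <- matrix(runif(length(x)) < p, nrow = nrow(x), ncol = ncol(x))
  x[mask] <- value
  x
}

# Gaussian noise: add zero-mean noise to every entry, then clip the result
# back into the assumed input range [lower, upper].
corrupt_gaussian <- function(x, sd = 0.1, lower = 0, upper = 1) {
  noisy <- x + rnorm(length(x), mean = 0, sd = sd)
  pmin(pmax(noisy, lower), upper)
}

set.seed(123)
x <- matrix(runif(20), nrow = 4)          # toy inputs scaled to [0, 1]
x_masked <- corrupt_mask(x, p = 0.5)      # about half the entries set to 0
x_noisy  <- corrupt_gaussian(x, sd = 0.1) # noisy copy, still within [0, 1]
```

The corrupted copy would be used as the training input, while the untouched `x` is kept for measuring reconstruction error.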

19.5.2 Coding example

- Train the model.

denoise_ae <- h2o.deeplearning(
  x = seq_along(features),
  # Corrupted features
  training_frame = inputs_currupted_gaussian,
  # Original features
  validation_frame = features,
  autoencoder = TRUE,
  hidden = 100,
  activation = 'Tanh',
  sparse = TRUE
)

- Evaluate the model.

# Print performance
h2o.performance(denoise_ae, valid = TRUE)
## H2OAutoEncoderMetrics: deeplearning
## ** Reported on validation data. **
##
## Validation Set Metrics:
## =====================
##
## MSE: (Extract with `h2o.mse`) 0.02048465
## RMSE: (Extract with `h2o.rmse`) 0.1431246