6.2 Expanding Numeric Transformations

Just as we can expand qualitative features into multiple useful columns, we can also expand a single numeric predictor into several new ones. The following subsections divide these expansions into two categories: nonlinear features via basis expansions and splines, and discretized predictors.

6.2.1 Nonlinear Features via Basis Expansions and Splines

6.2.1.1 Basis Expansions

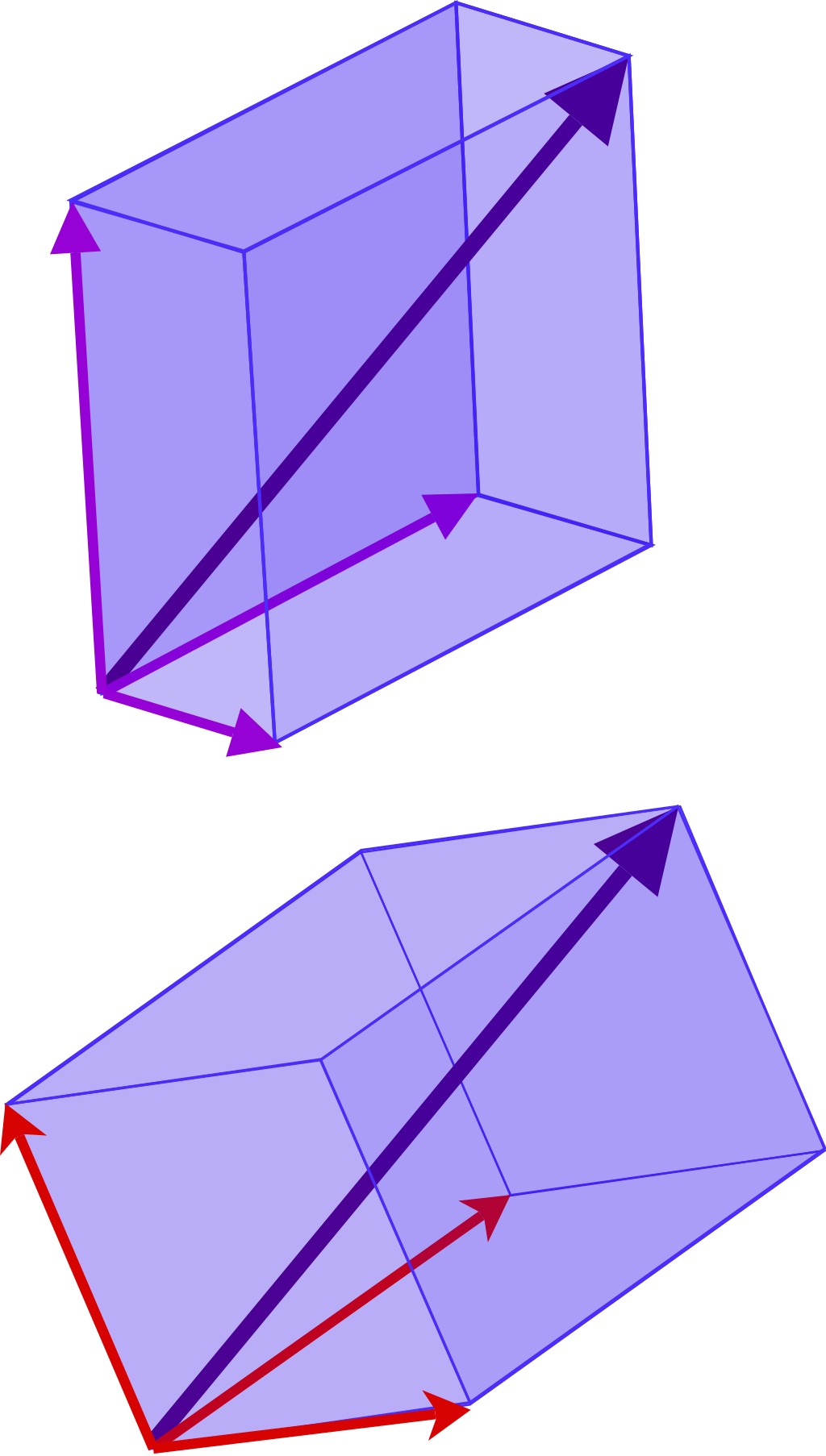

REMINDER: A basis of a vector space \(V\) is a set of vectors \(B\) such that every vector in \(V\) can be written uniquely as a finite linear combination of vectors in \(B\).

A basis expansion takes a feature \(x\) and derives a set of functions \(f_i(x)\) that are combined linearly, \(f(x) = \sum_i \beta_i f_i(x)\), sort of like the polynomial contrasts in the previous section on categorical predictors. A cubic basis expansion, for example, adds two features, a squared and a cubed version of the original, giving \(f(x) = \beta_0 + \beta_1 x + \beta_2 x^2 + \beta_3 x^3\). The \(\beta\) terms are then estimated with ordinary linear regression.
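A minimal sketch of a cubic basis expansion, assuming NumPy and synthetic data (not the book's housing data): the single predictor becomes the columns \(1, x, x^2, x^3\), and an ordinary least-squares fit estimates one \(\beta\) per column. The helper name `cubic_basis` is hypothetical.

```python
import numpy as np

def cubic_basis(x):
    """Expand a 1-D predictor into the columns 1, x, x^2, x^3."""
    x = np.asarray(x, dtype=float)
    return np.column_stack([np.ones_like(x), x, x**2, x**3])

# Synthetic data: a known cubic trend plus a little noise.
rng = np.random.default_rng(0)
x = rng.uniform(-2, 2, size=200)
y = 1.0 - 0.5 * x + 0.8 * x**2 + 0.3 * x**3 + rng.normal(scale=0.1, size=x.size)

X = cubic_basis(x)                              # 200 rows, 4 basis columns
beta, *_ = np.linalg.lstsq(X, y, rcond=None)    # plain linear regression
print(np.round(beta, 2))                        # close to the true coefficients
```

The model is still linear in the \(\beta\) terms; only the features are nonlinear in \(x\).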

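But what if the trend changes across different parts of the predictor's range? A single global polynomial has to fit the whole range at once and can fit poorly where the relationship shifts.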

The sale price and lot area data for the training set.

6.2.1.2 Polynomial Splines

Polynomial splines are a basis expansion that, instead of fitting one global polynomial, divide the predictor's range into regions whose boundaries are called knots. Each region gets its own fitted polynomial, and the pieces are constrained to join smoothly at the knots, so the overall curve can describe complex relationships. The coefficients are still estimated with ordinary linear regression.
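A minimal sketch of a cubic spline basis, assuming NumPy and synthetic data. It uses the truncated power basis because it is short to write: each knot \(k\) adds a column \((x - k)_+^3\) that is zero to the left of the knot. This is an illustrative choice, not the basis the book's figure uses; libraries typically use B-splines (same space, better numerical behaviour) or natural splines (which also force linearity beyond the boundary knots). The names `truncated_power_basis` and `rss` and the knot values are hypothetical.

```python
import numpy as np

def truncated_power_basis(x, knots):
    """Cubic spline basis: 1, x, x^2, x^3, plus (x - k)_+^3 for each knot k.
    Each extra column is zero left of its knot, so it only bends the curve
    to the right of that knot while keeping the fit smooth there."""
    x = np.asarray(x, dtype=float)
    cols = [np.ones_like(x), x, x**2, x**3]
    cols += [np.clip(x - k, 0.0, None) ** 3 for k in knots]
    return np.column_stack(cols)

def rss(X, y):
    """Residual sum of squares of a least-squares fit of y on X."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return float(np.sum((y - X @ beta) ** 2))

# Synthetic trend that changes direction several times.
rng = np.random.default_rng(1)
x = np.sort(rng.uniform(0, 10, size=300))
y = np.sin(x) + rng.normal(scale=0.3, size=x.size)

knots = [2.5, 5.0, 7.5]
rss_cubic = rss(truncated_power_basis(x, []), y)      # global cubic only
rss_spline = rss(truncated_power_basis(x, knots), y)  # cubic spline, 3 knots
print(round(rss_cubic, 1), round(rss_spline, 1))
```

Because the spline basis contains the global cubic's columns, its least-squares fit can never be worse on the training data; the question is whether the extra flexibility generalizes.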

Knots are usually placed at percentiles of the predictor. The number of knots can be chosen with a grid search, by visually inspecting the results, or with generalized cross-validation (GCV), which some spline functions use.
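A sketch of percentile knots plus a GCV search over the number of knots, assuming NumPy, synthetic data, and an unpenalized least-squares spline. For such a fit the GCV score reduces to \(\mathrm{GCV} = \frac{\mathrm{RSS}/n}{(1 - p/n)^2}\), where \(p\) is the number of basis columns. The snippet redefines a compact truncated power basis so it runs on its own; all function names are hypothetical, and real spline functions may compute GCV differently (for example with a smoothing penalty).

```python
import numpy as np

def truncated_power_basis(x, knots):
    """Cubic spline basis: 1, x, x^2, x^3, plus (x - k)_+^3 per knot."""
    x = np.asarray(x, dtype=float)
    cols = [np.ones_like(x), x, x**2, x**3]
    cols += [np.clip(x - k, 0.0, None) ** 3 for k in knots]
    return np.column_stack(cols)

def percentile_knots(x, n_knots):
    """Place n_knots interior knots at evenly spaced percentiles of x."""
    probs = np.linspace(0, 100, n_knots + 2)[1:-1]   # drop 0th and 100th
    return np.percentile(x, probs)

def gcv_score(x, y, n_knots):
    """GCV = (RSS / n) / (1 - p / n)^2 for an ordinary least-squares fit;
    p, the trace of the hat matrix, equals the number of basis columns."""
    X = truncated_power_basis(x, percentile_knots(x, n_knots))
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    rss = float(np.sum((y - X @ beta) ** 2))
    n, p = X.shape
    return (rss / n) / (1.0 - p / n) ** 2

rng = np.random.default_rng(1)
x = np.sort(rng.uniform(0, 10, size=300))
y = np.sin(x) + rng.normal(scale=0.3, size=x.size)

scores = {k: gcv_score(x, y, k) for k in range(1, 11)}   # grid of knot counts
best_k = min(scores, key=scores.get)                      # lowest GCV wins
print(best_k, round(scores[best_k], 4))
```

The \((1 - p/n)^2\) denominator penalizes extra columns, so adding knots only helps if it reduces the RSS enough to pay for them.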

The basis expansions above are created without looking at the outcome variable, but that doesn't have to be the case. Generalized additive models (GAMs) work much like polynomial splines but are supervised: they can adaptively model separate basis functions for each predictor. Loess regression is another supervised nonlinear smoother.
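To make "supervised smoother" concrete, here is a minimal sketch of degree-1 loess (local linear regression with tricube weights, no robustness iterations), assuming NumPy and synthetic data. This is a simplified stand-in for illustration, not any particular library's loess implementation; `loess_1d` and `frac` are hypothetical names. Unlike the fixed basis expansions, every fitted value here is driven directly by the outcome `y`.

```python
import numpy as np

def loess_1d(x, y, x_eval, frac=0.3):
    """Degree-1 loess without robustness iterations: for each evaluation point,
    fit a tricube-weighted straight line to the nearest frac * n observations
    and return that line's value at the point."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    k = max(int(np.ceil(frac * x.size)), 3)    # neighbourhood size
    fitted = np.empty(len(x_eval))
    for i, x0 in enumerate(np.asarray(x_eval, dtype=float)):
        dist = np.abs(x - x0)
        idx = np.argsort(dist)[:k]             # k nearest observations
        h = max(dist[idx].max(), 1e-12)        # local bandwidth
        w = (1.0 - (dist[idx] / h) ** 3) ** 3  # tricube weights in [0, 1]
        sw = np.sqrt(w)
        A = np.column_stack([np.ones(k), x[idx] - x0])   # line centred at x0
        coef, *_ = np.linalg.lstsq(A * sw[:, None], y[idx] * sw, rcond=None)
        fitted[i] = coef[0]                    # intercept = fitted value at x0
    return fitted

rng = np.random.default_rng(4)
x = rng.uniform(0, 10, size=300)
y = np.sin(x) + rng.normal(scale=0.2, size=x.size)
grid = np.linspace(1, 9, 50)
smooth = loess_1d(x, y, grid, frac=0.2)
```

`frac` plays the role that the number of knots plays for splines: smaller values follow the data more closely at the cost of more variance.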

(a) Features created using a natural spline basis function for the lot area predictor. The blue lines correspond to the knots. (b) The fit after the regression model estimated the model coefficients for each feature.

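Lastly, multivariate adaptive regression spline (MARS) models work with a single knot at a time through a hinge transformation: for a knot \(c\), the pair of features \(\max(0, x - c)\) and \(\max(0, c - x)\). Like GAMs, MARS uses the outcome to decide where the knots go.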
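A minimal sketch of the hinge idea, assuming NumPy and synthetic data: build the hinge pair for a candidate knot and search every observed value of one predictor for the knot with the lowest residual sum of squares. This is only the single-knot search for one predictor; a full MARS model also adds terms across predictors and prunes them afterwards, which is not shown. `hinge_pair` and `best_single_knot` are hypothetical names.

```python
import numpy as np

def hinge_pair(x, c):
    """MARS-style hinge features for knot c: max(0, x - c) and max(0, c - x)."""
    x = np.asarray(x, dtype=float)
    return np.maximum(0.0, x - c), np.maximum(0.0, c - x)

def best_single_knot(x, y):
    """Try every observed x value as the knot; keep the one whose
    intercept + hinge-pair least-squares fit has the smallest RSS."""
    best_rss, best_c = np.inf, None
    for c in np.unique(x):
        right, left = hinge_pair(x, c)
        X = np.column_stack([np.ones_like(right), right, left])
        beta, *_ = np.linalg.lstsq(X, y, rcond=None)  # handles all-zero columns
        rss = float(np.sum((y - X @ beta) ** 2))
        if rss < best_rss:
            best_rss, best_c = rss, c
    return best_c, best_rss

# Synthetic trend: flat below x = 4, rising with slope 2 above it.
rng = np.random.default_rng(2)
x = rng.uniform(0, 10, size=200)
y = 1.0 + 2.0 * np.maximum(0.0, x - 4.0) + rng.normal(scale=0.5, size=x.size)
knot, rss = best_single_knot(x, y)
print(round(knot, 2))   # should land near 4
```

The search uses `y` to place the knot, which is what makes the hinge features supervised.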


6.2.2 Discretize Predictors as a Last Resort

If we discretize a predictor, turning it into a categorical one, we are essentially binning the data. Unsupervised approaches to binning are rather straightforward, using percentiles or the median as cut-off points, but the cut-off points can also be chosen using the outcome (supervised binning) to improve performance.
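A minimal sketch of unsupervised percentile binning, assuming NumPy: cut the predictor at its interior quantiles and map each value to an integer bin label. With `n_bins=2` this becomes a median split. The helper name `quantile_bins` is hypothetical.

```python
import numpy as np

def quantile_bins(x, n_bins=4):
    """Unsupervised binning: cut x at its interior quantiles and return
    integer bin labels 0..n_bins-1 together with the cut points."""
    x = np.asarray(x, dtype=float)
    cuts = np.quantile(x, np.linspace(0, 1, n_bins + 1)[1:-1])
    return np.digitize(x, cuts), cuts   # label i means cuts[i-1] <= x < cuts[i]

x = np.arange(1, 13, dtype=float)       # 1, 2, ..., 12
labels, cuts = quantile_bins(x, n_bins=4)
print(cuts)     # [3.75 6.5  9.25]
print(labels)   # [0 0 0 1 1 1 2 2 2 3 3 3]
```

Every value inside a bin now gets the same label, so the differences between, say, 4 and 6 are gone.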

The book gives many reasons why this should NOT be done. Although it can simplify modeling, binning throws away much of the nuance in the data: it can make trends harder to model, it can increase the probability of modeling a relationship that is not there, and the cut-off points are rather arbitrary.
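A small simulation of the "harder to model trends" point, assuming NumPy and synthetic data (not the book's simulation): the outcome follows a smooth linear trend, and we compare a straight-line fit on the raw predictor with a model that only knows which quartile bin each value fell into (one mean per bin).

```python
import numpy as np

rng = np.random.default_rng(3)
x = rng.uniform(0, 10, size=500)
y = 2.0 * x + rng.normal(scale=0.5, size=x.size)    # a smooth linear trend

# Model on the raw predictor: intercept + slope.
X_raw = np.column_stack([np.ones_like(x), x])
beta_raw, *_ = np.linalg.lstsq(X_raw, y, rcond=None)
rss_raw = float(np.sum((y - X_raw @ beta_raw) ** 2))

# Model on the binned predictor: one-hot quartile bins, i.e. one mean per bin.
cuts = np.quantile(x, [0.25, 0.5, 0.75])
labels = np.digitize(x, cuts)
X_bin = np.eye(4)[labels]
beta_bin, *_ = np.linalg.lstsq(X_bin, y, rcond=None)
rss_bin = float(np.sum((y - X_bin @ beta_bin) ** 2))

print(round(rss_raw, 1), round(rss_bin, 1))
```

The binned model uses more parameters yet fits far worse, because within each bin it cannot represent the trend at all.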

Raw data (a) and binned (b) simulation data. Also, the results of a simulation (c) where the predictor is noninformative.