10.3 Classes

Feature selection methodologies:

- intrinsic (implicit): feature selection is built into the model itself. Some of these models use a greedy approach that makes locally optimal choices, which tends to identify a narrow set of predictors. Model examples:

  - tree- and rule-based models: predictors are selected through the splits

  - MARS (multivariate adaptive regression splines): creates new features from the predictors

  - regularization models: e.g. the lasso uses a penalty that can shrink predictor coefficients to exactly zero

- filter: an initial supervised analysis of the predictors decides which ones are passed on to the model (example: Section 5.6 uses an odds-ratio cut-off, together with statistical significance, as the selector). It is effective at capturing large trends. (More in Chapter 11.)
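The filter idea can be sketched as a single pass over the predictors. The example below is a minimal illustration, not the procedure used in Section 5.6: the function name `odds_ratio_filter`, the `min_fold` threshold, the 0.5 continuity correction, the confidence-interval test, and the keyword counts are all assumptions made up for this sketch.

```python
import math

def odds_ratio_filter(tables, min_fold=2.0, z=1.96):
    """Keep predictors whose odds ratio is both significant and large.

    tables maps a predictor name to a 2x2 count table (a, b, c, d):
      a = present & event, b = present & no event,
      c = absent & event,  d = absent & no event.
    """
    kept = {}
    for name, (a, b, c, d) in tables.items():
        # Assumption: add 0.5 to every cell so empty cells cannot divide by zero.
        a, b, c, d = (x + 0.5 for x in (a, b, c, d))
        log_or = math.log((a * d) / (b * c))
        se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
        lower, upper = log_or - z * se, log_or + z * se
        significant = lower > 0 or upper < 0       # interval excludes OR = 1
        large = abs(log_or) >= math.log(min_fold)  # at least a min_fold change
        if significant and large:
            kept[name] = math.exp(log_or)
    return kept

# Hypothetical counts, invented for illustration only.
keyword_tables = {
    "kw_a": (90, 30, 410, 470),
    "kw_b": (52, 48, 448, 452),
    "kw_c": (12, 60, 488, 440),
}
selected = odds_ratio_filter(keyword_tables)
print(sorted(selected))
```

Each predictor is judged on its own and the search runs only once, which is what separates a filter from the iterative wrapper search.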

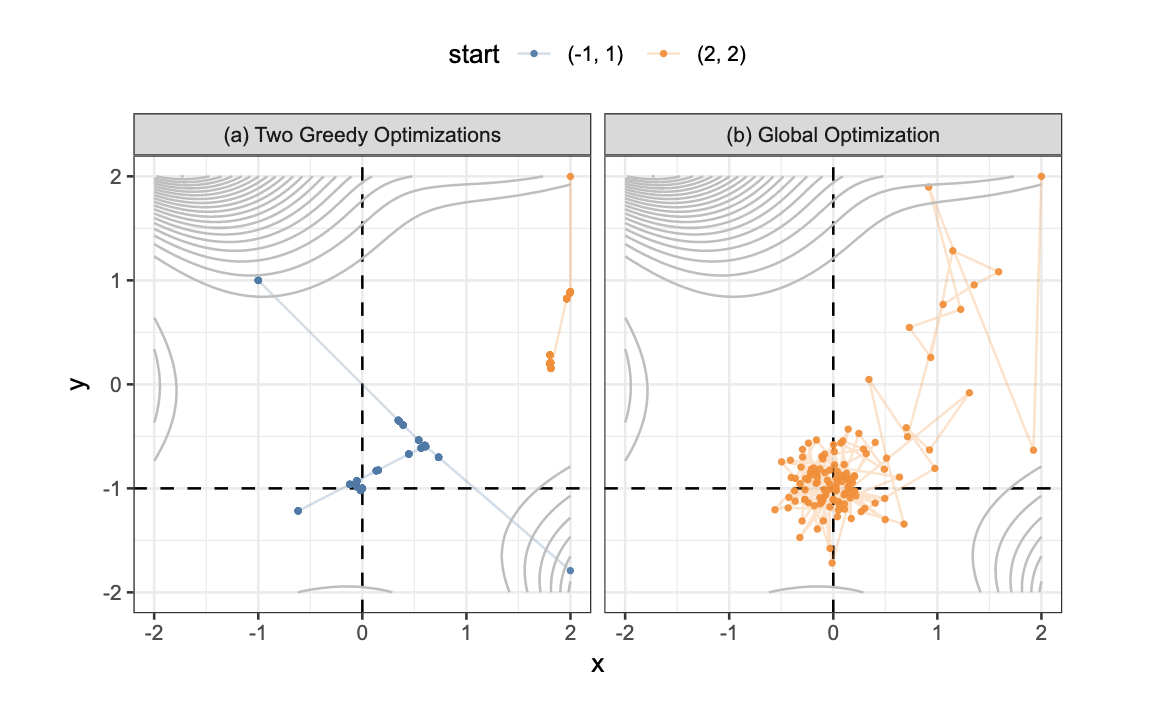

- wrapper: an iterative search that supplies subsets of predictors to the model and uses the resulting model performance to decide which subset to try next. It can be greedy (e.g. backward selection via recursive feature elimination, RFE) or non-greedy. A non-greedy approach re-evaluates earlier combinations and incorporates randomness (e.g. genetic algorithms, GA, and simulated annealing, SA).

Figure 10.1: Examples of greedy and global search methods when solving functions with continuous inputs.
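The contrast in the figure can be reproduced on a toy problem. The sketch below is an assumption-laden illustration rather than the code behind Figure 10.1: the objective `f`, the starting point, the cooling schedule, and all parameter values are invented. The greedy search only ever accepts improvements, while simulated annealing occasionally accepts worse points so it can leave a local minimum.

```python
import math
import random

def f(x):
    # Toy objective with several local minima; the global one is near x = -0.5.
    return 0.1 * x**2 + math.sin(3 * x)

def greedy_search(x, step=0.1, tol=1e-6):
    """Move to a neighbor only if it lowers f; otherwise shrink the step."""
    while step > tol:
        best = min((x - step, x + step), key=f)
        if f(best) < f(x):
            x = best
        else:
            step /= 2
    return x

def simulated_annealing(x, n_iter=5000, temp=2.0, cooling=0.999, seed=1):
    """Sometimes accept worse points, less often as the temperature falls."""
    rng = random.Random(seed)
    best = x
    for _ in range(n_iter):
        # Propose a random neighbor, clipped to the search interval [-5, 5].
        candidate = min(5.0, max(-5.0, x + rng.gauss(0, 1)))
        delta = f(candidate) - f(x)
        if delta < 0 or rng.random() < math.exp(-delta / temp):
            x = candidate
            if f(x) < f(best):
                best = x  # remember the best accepted point so far
        temp *= cooling
    return best

start = 3.5
x_greedy = greedy_search(start)
x_sa = simulated_annealing(start)
print(f"greedy:    x={x_greedy:.3f}, f={f(x_greedy):.3f}")
print(f"annealing: x={x_sa:.3f}, f={f(x_sa):.3f}")
```

Starting from `x = 3.5`, the greedy search settles in the nearest valley, whereas the annealing run is free to jump to deeper ones. The same trade-off applies when the search space is subsets of predictors instead of a continuous input.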

- If non-linear intrinsic methods have good performance, one could proceed to a wrapper method combined with a non-linear model.

- If linear intrinsic methods have good performance, one could proceed to a wrapper method combined with a linear model.
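As a reminder of what a linear intrinsic method does, the sketch below fits a lasso by cyclic coordinate descent and shows a weak coefficient being set to exactly zero. It is a minimal illustration under stated assumptions: the objective scaling `(1/2n)`, the function names, the penalty value `lam=0.5`, and the four-row data set are all invented for this example.

```python
def soft_threshold(value, lam):
    # Shrink toward zero by lam; anything within [-lam, lam] becomes exactly 0.
    if value > lam:
        return value - lam
    if value < -lam:
        return value + lam
    return 0.0

def lasso_coordinate_descent(X, y, lam, n_sweeps=100):
    """Minimize (1/2n)*||y - X b||^2 + lam*||b||_1 by cyclic coordinate descent."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_sweeps):
        for j in range(p):
            rho = 0.0   # column j times the residual that ignores column j
            norm = 0.0  # squared length of column j
            for i in range(n):
                partial = y[i] - sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * partial
                norm += X[i][j] ** 2
            beta[j] = soft_threshold(rho / n, lam) / (norm / n)
    return beta

# Hypothetical toy data: y depends strongly on column 0, weakly on column 1,
# and not at all on column 2 (y = 2*col0 + 0.1*col1).
X = [[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]]
y = [2.1, 1.9, -1.9, -2.1]
beta = lasso_coordinate_descent(X, y, lam=0.5)
print(beta)
```

Only the first predictor survives, so the fitted model doubles as a feature selector. If such a model performs well, the surviving predictors and the linear model family are a natural starting point for a wrapper search.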