52. VQ-VAE

Learning objectives

- Introduce VQ-VAE

Variational Auto-Encoders

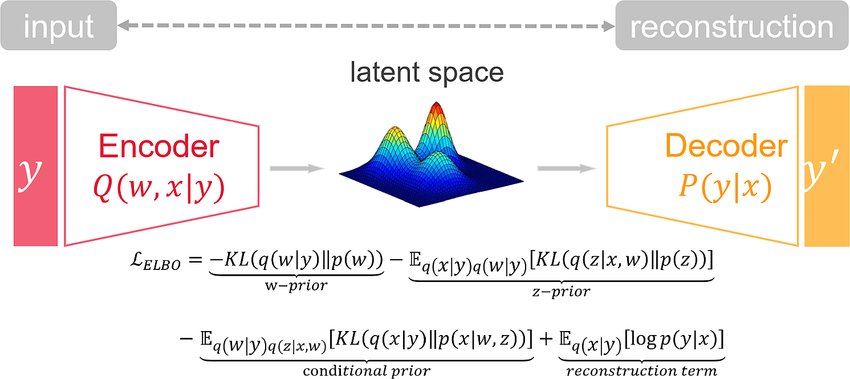

Variational autoencoders (VAEs) model the latent space between the encoder and decoder as a probability distribution, usually built from Gaussian distributions, and are trained to maximize the evidence lower bound (ELBO).
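For reference, the ELBO can be written out as follows. The notation is an assumption (it does not appear on the slides): $q_\phi(z \mid x)$ is the encoder's distribution over latents, $p_\theta(x \mid z)$ is the decoder, and $p(z)$ is the prior, commonly taken to be a standard Gaussian.

$$
\log p_\theta(x) \;\ge\; \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] \;-\; D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)
$$

The first term rewards accurate reconstruction; the second keeps the encoder's distribution close to the prior.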

VAE Gaussians

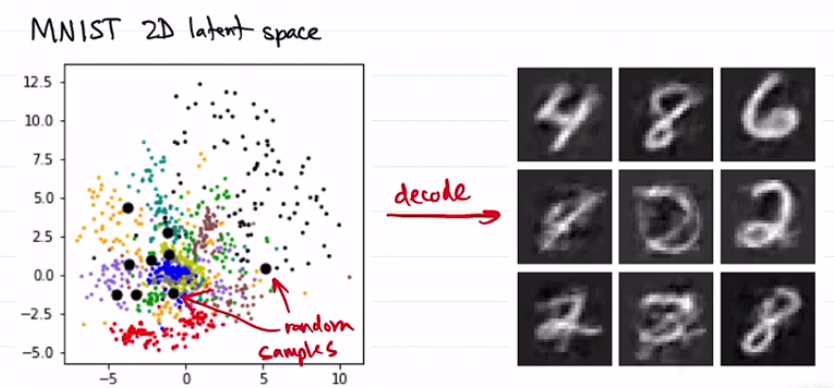

without VAE

- image source: Jeff Orchard

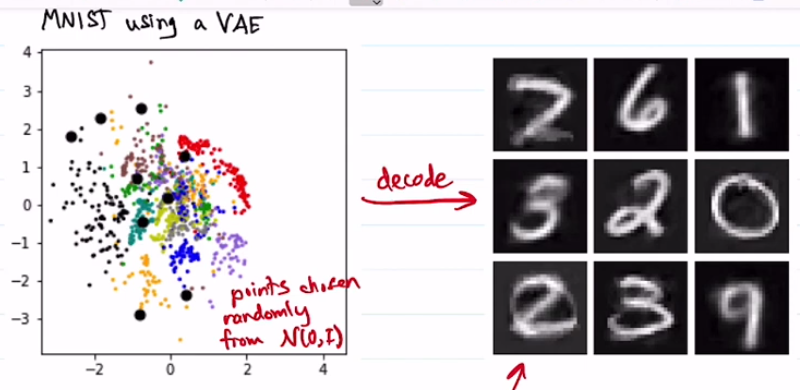

with VAE

- image source: Jeff Orchard

VQ-VAE

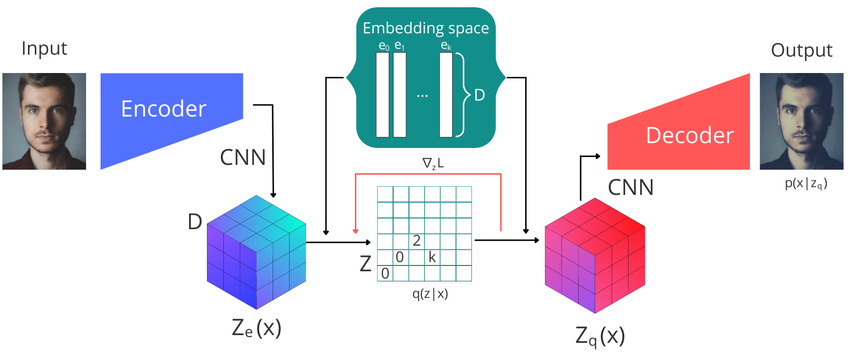

Vector-quantized variational autoencoders (VQ-VAEs) use a discrete embedding space: each encoder output is replaced by the nearest vector in a learned codebook.

- for example: 32x32 embedding space of vectors
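The lookup into a discrete embedding space can be sketched in a few lines of NumPy. This is a minimal illustration, not the method from the slides: the function name `quantize`, the toy codebook, and the choice of squared Euclidean distance are assumptions, and it covers only the forward lookup, not training.

```python
import numpy as np

def quantize(z_e, codebook):
    """Replace each encoder output vector with its nearest codebook vector.

    z_e:      (N, D) array of continuous encoder outputs (hypothetical input)
    codebook: (K, D) array of embedding vectors (hypothetical toy codebook)
    """
    # Squared Euclidean distance from every input to every codebook entry: (N, K)
    dists = ((z_e[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    # Index of the closest codebook entry for each input vector
    indices = dists.argmin(axis=1)
    # Discrete latent: the selected codebook vectors, shape (N, D)
    z_q = codebook[indices]
    return z_q, indices

# Toy codebook with K=8 entries of dimension D=4; entry k is [k, k, k, k]
codebook = np.arange(8, dtype=float)[:, None] * np.ones((1, 4))

# Three encoder outputs lying near entries 3, 0, and 5
z_e = np.array([
    [2.9, 3.1, 3.0, 3.2],
    [0.1, -0.2, 0.0, 0.1],
    [5.4, 5.2, 5.1, 4.9],
])

z_q, idx = quantize(z_e, codebook)
print(idx)  # [3 0 5]
```

The decoder then receives `z_q` rather than `z_e`, so every latent it sees is one of a finite set of codebook vectors.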

VQ-VAE