28. Vector Databases and Reranking

Learning objectives

- What are vector databases?

- The basics of reranking models

Vector databases

From "A fun and absurd introduction to Vector Databases" by Alexander Chatzizacharias


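Vector databases store embeddings (vectors) and enable semantic search over your data, i.e. finding items by meaning rather than by exact keyword match.

- Embedding models turn text (or other data) into vectors that capture its meaning, so semantically similar inputs end up close together in vector space.

- Used in Retrieval-Augmented Generation (RAG) systems to retrieve the documents relevant to a query.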
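To make the embed, store, and query flow concrete, here is a minimal sketch of an in-memory store. `ToyVectorStore` is a hypothetical class, not a real library API, and the 3-dimensional vectors are assumed toy values standing in for the output of a real embedding model. The query returns the stored texts whose vectors are most similar to the query vector.

```python
import math
from dataclasses import dataclass, field


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


@dataclass
class ToyVectorStore:
    """Hypothetical in-memory store: keeps (text, embedding) pairs in a list."""
    items: list[tuple[str, list[float]]] = field(default_factory=list)

    def add(self, text: str, embedding: list[float]) -> None:
        self.items.append((text, embedding))

    def query(self, query_embedding: list[float], k: int = 2) -> list[tuple[str, float]]:
        # Score every stored item, then keep the k most similar (highest cosine).
        scored = [(text, cosine_similarity(query_embedding, emb)) for text, emb in self.items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


store = ToyVectorStore()
# Toy "embeddings" (assumed values, not produced by a real model).
store.add("How do I reset my password?", [0.9, 0.1, 0.0])
store.add("Steps to change your account password", [0.8, 0.2, 0.1])
store.add("Best pizza places in Naples", [0.0, 0.1, 0.9])

# Assumed embedding of the query "forgot my password".
results = store.query([0.85, 0.15, 0.05], k=2)
for text, score in results:
    print(f"{score:.3f}  {text}")
```

Both password documents are returned and the pizza document is not, even though the query shares few exact words with them: the match is made on vectors. A real vector database replaces the full scan in `query` with an index.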


Indexes and distances

Distance metrics (smaller means closer) or similarity metrics (larger means closer):

- Euclidean distance

- Cosine similarity

- Hamming distance

- Manhattan distance
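The four metrics above can be written in a few lines each. This is a plain-Python sketch for illustration. Real vector databases use optimized, vectorized implementations. Hamming distance is shown on binary vectors, which is its typical use.

```python
import math


def euclidean_distance(a, b):
    """Straight-line (L2) distance: smaller means closer."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan_distance(a, b):
    """Sum of absolute coordinate differences (L1): smaller means closer."""
    return sum(abs(x - y) for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1 = same direction, 0 = orthogonal, -1 = opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def hamming_distance(a, b):
    """Number of positions where the entries differ; typically used on binary vectors."""
    return sum(x != y for x, y in zip(a, b))


print(euclidean_distance([1, 2, 3], [4, 6, 3]))      # 5.0
print(manhattan_distance([1, 2, 3], [4, 6, 3]))      # 7
print(cosine_similarity([1, 0], [0, 1]))             # 0.0
print(hamming_distance([1, 0, 1, 1], [1, 1, 0, 1]))  # 2
```

Cosine similarity ignores vector length and compares only direction, which is why it is a common default for text embeddings.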

Indexing algorithms:

Exact nearest neighbor: linear search, k-nearest neighbors, space partitioning.
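Exact search by linear scan is the simplest baseline. This sketch (the function name `knn_linear_search` is illustrative) measures the Euclidean distance from the query to every stored vector and keeps the k closest. The result is always exact, but every query touches all n vectors.

```python
import heapq
import math


def knn_linear_search(query, vectors, k):
    """Exact k-NN by linear (brute-force) search.

    Computes the Euclidean distance from the query to every stored vector,
    so each query costs O(n * d) for n vectors of dimension d, and returns
    the indices of the k closest vectors, nearest first.
    """
    return heapq.nsmallest(k, range(len(vectors)), key=lambda i: math.dist(query, vectors[i]))


vectors = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [0.5, 0.2]]
print(knn_linear_search([0.0, 0.1], vectors, k=2))  # [0, 3]
```

The O(n · d) cost per query is what approximate indexes avoid by searching only part of the data.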

Approximate nearest neighbor:

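- IVFFlat: inverted file index with flat (uncompressed) vector storage; vectors are grouped into clusters and only the clusters closest to the query are searched.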

- Locality-sensitive hashing (LSH)

- Approximate Nearest Neighbors Oh Yeah (Annoy)

- Hierarchical Navigable Small World (HNSW)
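As one example of the approximate approach, here is a minimal sketch of locality-sensitive hashing for cosine similarity using random hyperplanes. `RandomHyperplaneLSH` is a hypothetical class written for illustration, not the API of any particular library. Vectors pointing in similar directions tend to receive the same hash and land in the same bucket, so a query only compares against its own bucket instead of the whole dataset.

```python
import random
from collections import defaultdict


class RandomHyperplaneLSH:
    """Minimal LSH index for cosine similarity (random-hyperplane hashing).

    Each vector is hashed to a tuple of bits; bit j records on which side of
    random hyperplane j the vector lies. Vectors separated by a small angle
    usually get the same bits and therefore share a bucket.
    """

    def __init__(self, dim, n_planes, seed=0):
        rng = random.Random(seed)
        # Each hyperplane is represented by a random normal vector.
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)]
        self.buckets = defaultdict(list)

    def _hash(self, v):
        # Sign of the dot product with each normal vector -> one bit per plane.
        return tuple(int(sum(p_i * v_i for p_i, v_i in zip(plane, v)) >= 0) for plane in self.planes)

    def add(self, item_id, v):
        self.buckets[self._hash(v)].append(item_id)

    def candidates(self, query):
        # Only items in the query's bucket are returned: fast, but approximate,
        # because a true neighbor that hashed to a different bucket is missed.
        return list(self.buckets.get(self._hash(query), []))


index = RandomHyperplaneLSH(dim=3, n_planes=8, seed=42)
index.add("a", [1.0, 2.0, 3.0])
index.add("b", [-1.0, -2.0, -3.0])        # opposite direction
print(index.candidates([2.0, 4.0, 6.0]))  # ['a'] (same direction as "a")
```

More planes give smaller, more selective buckets (faster, but more missed neighbors). A common refinement is to build several independent hash tables and take the union of their candidates, trading some speed for recall.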

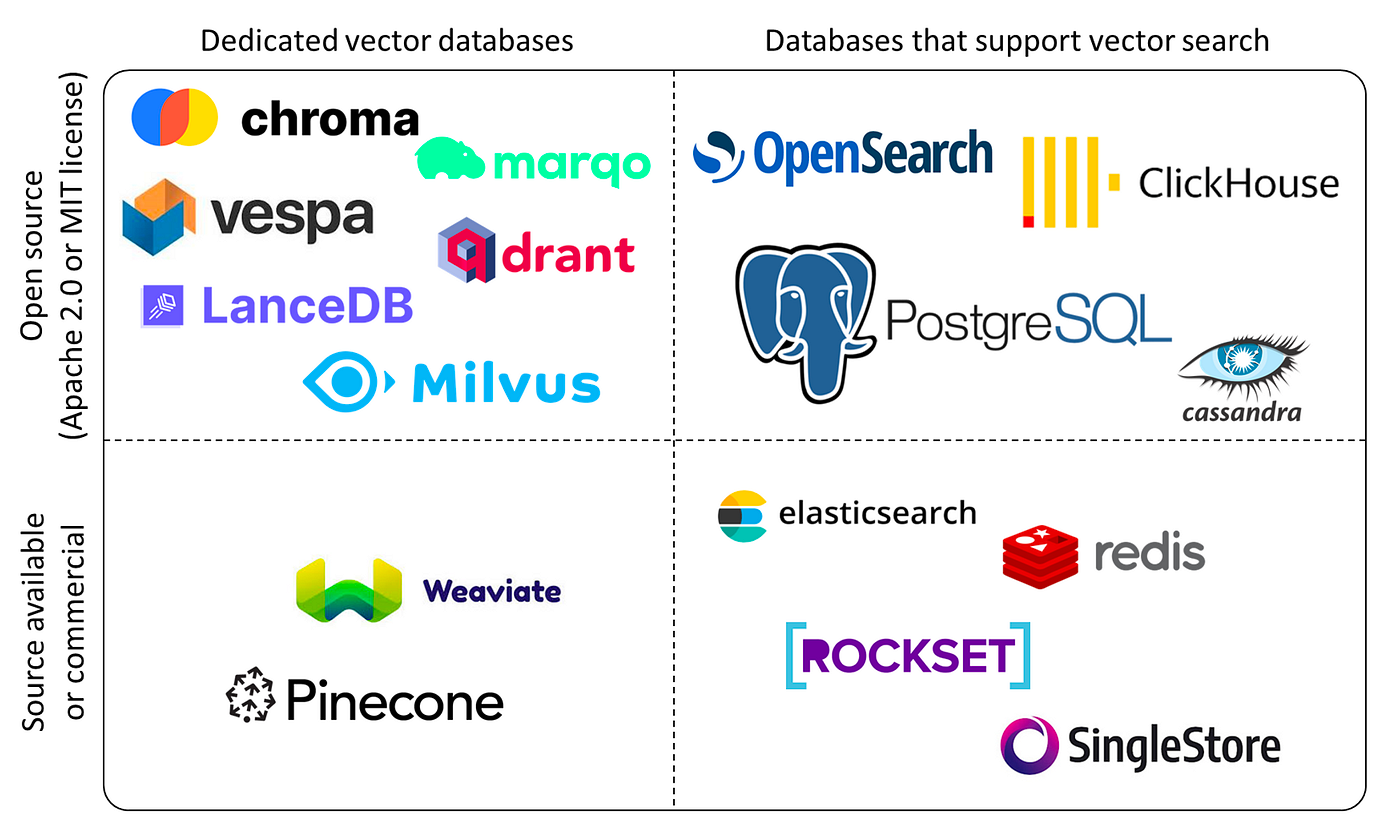

Some popular vector databases


Reranking

From "Rerankers and Two-Stage Retrieval" by Pinecone

Retrieval-Augmented Generation (RAG) is more than putting documents into a vector DB and adding an LLM on top.

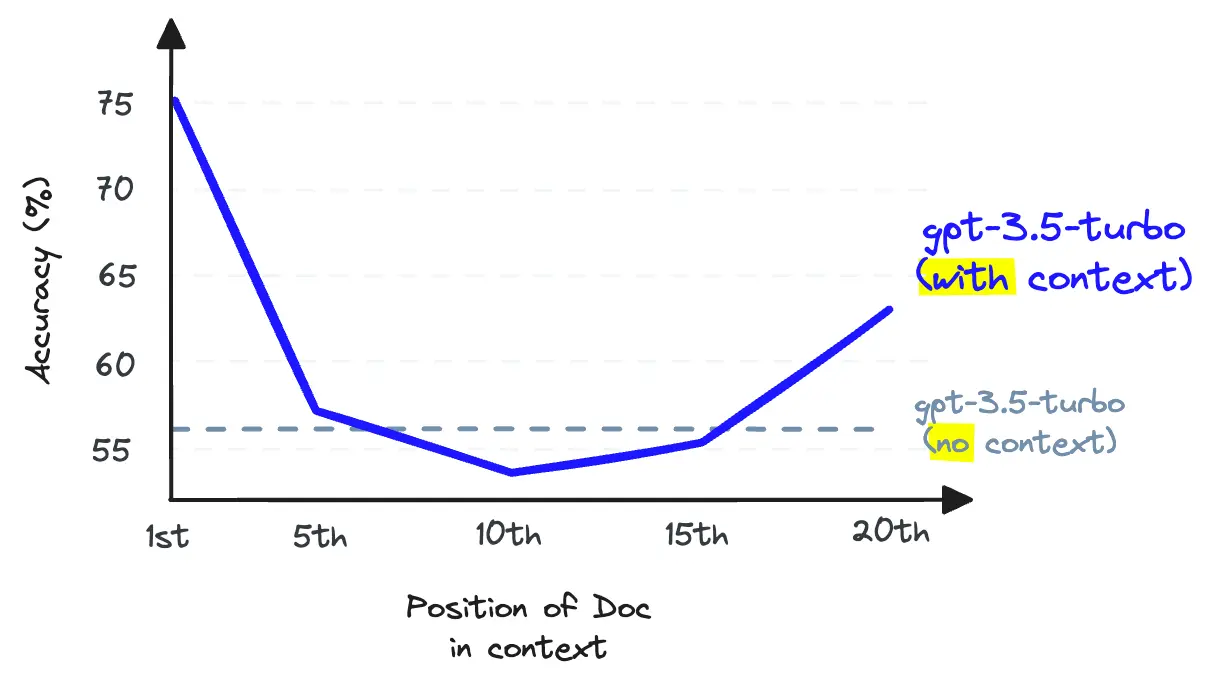

- Retrieval recall: the fraction of all relevant documents that the retriever actually returns.

- LLM recall: the ability of an LLM to find information in the text placed within its context window (its RAM).

- The more tokens we put in the context window, the lower the LLM recall.
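Retrieval recall is usually measured at a cutoff k (recall@k): the share of the relevant documents that appear among the top k results. In this sketch the document IDs, the ranking, and the relevance labels are all hypothetical. It shows how retrieving more documents raises retrieval recall.

```python
def recall_at_k(retrieved_ids, relevant_ids, k):
    """Fraction of the relevant documents that appear in the top-k retrieved results."""
    relevant = set(relevant_ids)
    if not relevant:
        raise ValueError("need at least one relevant document")
    top_k = set(retrieved_ids[:k])
    return len(top_k & relevant) / len(relevant)


retrieved = ["d3", "d7", "d1", "d9", "d4"]   # ranked retriever output (hypothetical)
relevant = {"d1", "d4", "d8"}                # ground-truth relevant docs (hypothetical)
print(recall_at_k(retrieved, relevant, k=2))  # 0.0
print(recall_at_k(retrieved, relevant, k=5))  # 2 of 3 relevant docs found
```

Raising k from 2 to 5 lifts retrieval recall from 0 to 2/3. However, passing all 5 documents to the LLM adds tokens and lowers LLM recall, which is the tension described above.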

Recall and context window

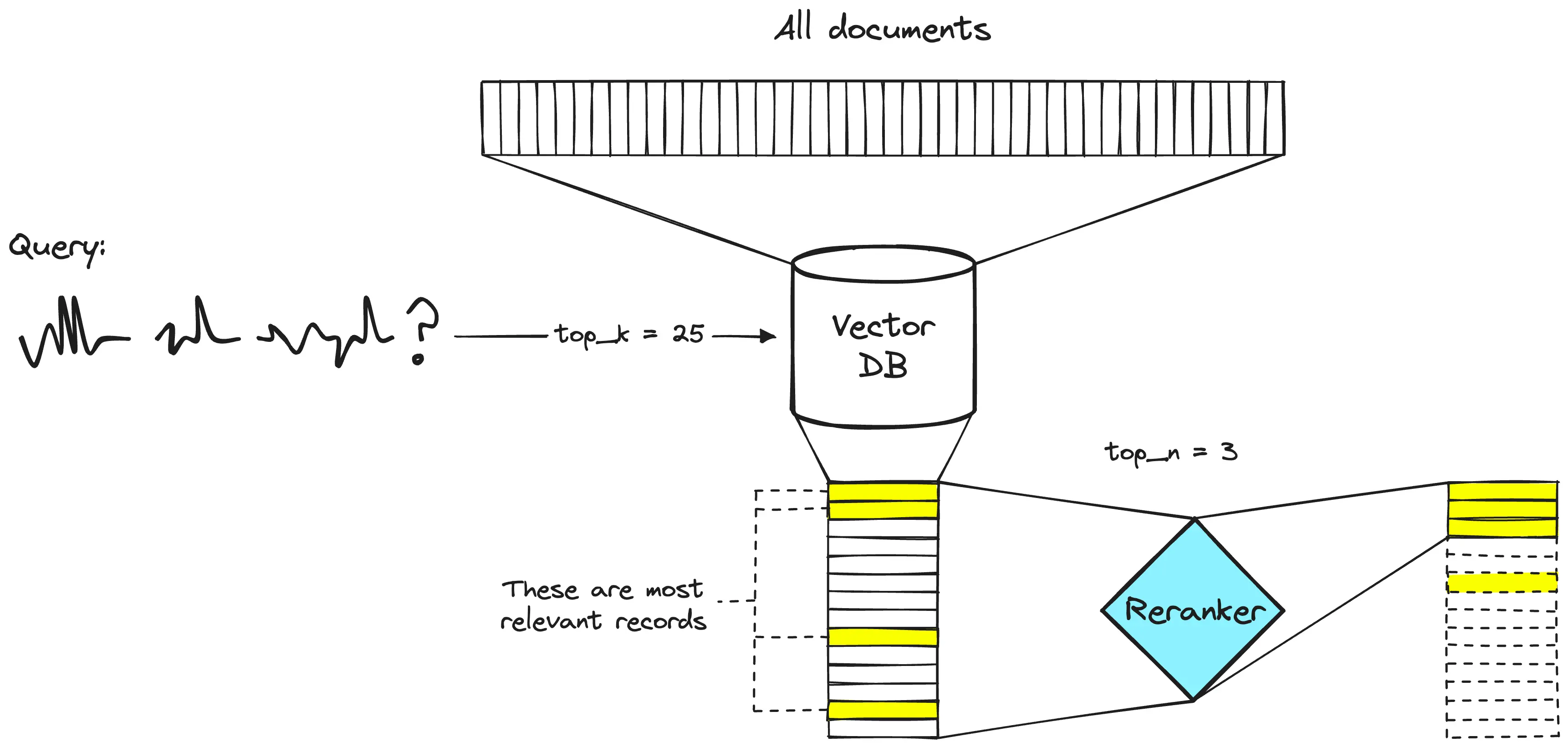

Maximize retrieval recall by retrieving many documents, then maximize LLM recall by minimizing the number of documents that reach the LLM.

Solution: Reranker model

Reranker

Reranker model

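Given a query and a document, the reranker outputs a relevance (similarity) score for that pair. Scoring every retrieved document against the query and sorting by that score reorders the documents by relevance to the query.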
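The reordering step can be sketched independently of any particular model. Here `rerank` takes a pluggable scoring function. `toy_relevance_score` is a hypothetical stand-in based on word overlap. A real reranker is typically a trained model (for example a cross-encoder) that reads the query and document together and predicts a relevance score.

```python
import re


def toy_relevance_score(query: str, document: str) -> float:
    """Hypothetical stand-in for a reranker model: fraction of query words found in the document."""
    q_words = set(re.findall(r"\w+", query.lower()))
    d_words = set(re.findall(r"\w+", document.lower()))
    return len(q_words & d_words) / len(q_words) if q_words else 0.0


def rerank(query, documents, score_fn, top_k=None):
    """Score each (query, document) pair, then sort documents by score, highest first."""
    scored = [(doc, score_fn(query, doc)) for doc in documents]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored if top_k is None else scored[:top_k]


docs = [
    "Pizza dough needs flour, water, yeast and salt.",
    "To reset your password, open the account settings page.",
    "Password managers store credentials securely.",
]
for doc, score in rerank("how to reset password", docs, toy_relevance_score):
    print(f"{score:.2f}  {doc}")
```

Because the scorer is passed in as a function, a real reranker model can replace the toy scorer without changing `rerank` itself.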

Two stages:

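- Embedding model/retriever: fast.

  - Retrieves a set of relevant documents from a larger dataset.

- Reranker: slow.

  - Reranks the documents retrieved by the first-stage model.

  - Slow because it runs a model on every (query, document) pair at query time, whereas the document embeddings used in the first stage are computed ahead of time.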
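The two stages can be combined in a short pipeline sketch. `two_stage_search` and `slow_overlap_score` are hypothetical names. The 2-D embeddings are assumed toy values, and the word-overlap scorer stands in for a real reranker model. The call counter shows the point of the design: the expensive scorer only sees the `n_candidates` documents kept by the fast first stage, not the whole corpus.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def two_stage_search(query_text, query_vec, corpus, slow_score, n_candidates, top_k):
    """corpus is a list of (text, precomputed_embedding) pairs."""
    # Stage 1 (fast): compare the query vector with every precomputed embedding
    # and keep a generous number of candidates to maximize retrieval recall.
    candidates = sorted(corpus, key=lambda item: cosine_similarity(query_vec, item[1]), reverse=True)[:n_candidates]
    # Stage 2 (slow): run the expensive scorer only on those candidates and keep
    # a small top_k so that few documents reach the LLM.
    reranked = sorted(candidates, key=lambda item: slow_score(query_text, item[0]), reverse=True)
    return [text for text, _ in reranked[:top_k]]


calls = 0


def slow_overlap_score(query, doc):
    """Hypothetical stand-in for a slow reranker model; counts its own calls."""
    global calls
    calls += 1
    return len(set(query.lower().split()) & set(doc.lower().split()))


corpus = [  # toy 2-D embeddings (assumed values)
    ("reset your password in settings", [0.7, 0.7]),
    ("password policy overview", [0.9, 0.1]),
    ("account settings help", [0.8, 0.6]),
    ("pizza recipes", [0.0, 1.0]),
    ("weather today", [-1.0, 0.0]),
]
top = two_stage_search("reset my password", [1.0, 0.0], corpus, slow_overlap_score, n_candidates=3, top_k=2)
print(top)    # ['reset your password in settings', 'password policy overview']
print(calls)  # 3: the slow scorer never saw the other 2 documents
```

The most useful document ranks only third after stage 1 but first after stage 2. Retrieving a generous N keeps it in play, and reranking moves it into the small set passed to the LLM.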