24. Distillation and Merging

Distillation

Learning objectives

- Finish the module on fine-tuning

- Learn to train a “student” with a “teacher”

Sources

Following the Gen AI Handbook, we looked at

Math Updates

Temperature

Another hyperparameter is the softmax temperature \(T\), which rescales the logits \(z_{i}\) before they are normalized:

\[p_{i} = \frac{\exp(z_{i}/T)}{\sum_{j} \exp(z_{j}/T)}\]

- \(T \rightarrow 0\): one-hot target vector (all probability on the largest logit)

- \(T \rightarrow \infty\): uniform distribution (random guessing)
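A minimal NumPy sketch of the temperature-scaled softmax, to make the two limits concrete. The function name `softmax_with_temperature` and the example logits are illustrative choices, not from the source; subtracting the maximum logit is a standard numerical-stability trick that leaves the probabilities unchanged.

```python
import numpy as np

def softmax_with_temperature(z, T=1.0):
    """p_i = exp(z_i / T) / sum_j exp(z_j / T), for T > 0."""
    scaled = np.asarray(z, dtype=float) / T
    # Subtracting the max avoids overflow and leaves p unchanged
    scaled = scaled - scaled.max()
    e = np.exp(scaled)
    return e / e.sum()

logits = [2.0, 1.0, 0.1]  # hypothetical logits for illustration
for T in (0.1, 1.0, 100.0):
    # Small T: nearly one-hot on the largest logit; large T: nearly uniform
    print(T, np.round(softmax_with_temperature(logits, T), 3))
```

In distillation, a moderate \(T > 1\) is what exposes the teacher's relative preferences among the non-top classes.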

KL Loss

The Kullback-Leibler (KL) divergence, used here as a loss, is defined as

\[\begin{array}{rcl} KL(p \,\|\, q) & = & \mathbb{E}_{p}\left[\log \frac{p}{q}\right] \\ ~ & = & \displaystyle\sum_{i} p_{i} \cdot \log(p_{i}) - \sum_{i} p_{i} \cdot \log(q_{i}) \end{array}\]
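The KL formula can be checked numerically. This sketch assumes discrete distributions given as arrays and uses the natural log; `kl_divergence` is a hypothetical helper name. It follows the second line of the definition term by term, using the convention \(0 \cdot \log 0 = 0\).

```python
import numpy as np

def kl_divergence(p, q):
    """KL(p || q) = sum_i p_i log p_i - sum_i p_i log q_i (natural log).

    Terms with p_i = 0 are dropped (0 * log 0 = 0). If q_i = 0 where
    p_i > 0, the result is inf, matching the definition.
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask])) - np.sum(p[mask] * np.log(q[mask])))

p = [0.5, 0.5]
q = [0.9, 0.1]
print(kl_divergence(p, q), kl_divergence(q, p))  # the two values differ: KL is not symmetric
```

Because KL is not symmetric, the order of arguments matters; in distillation \(p\) is usually the teacher's distribution and \(q\) the student's.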

BERT

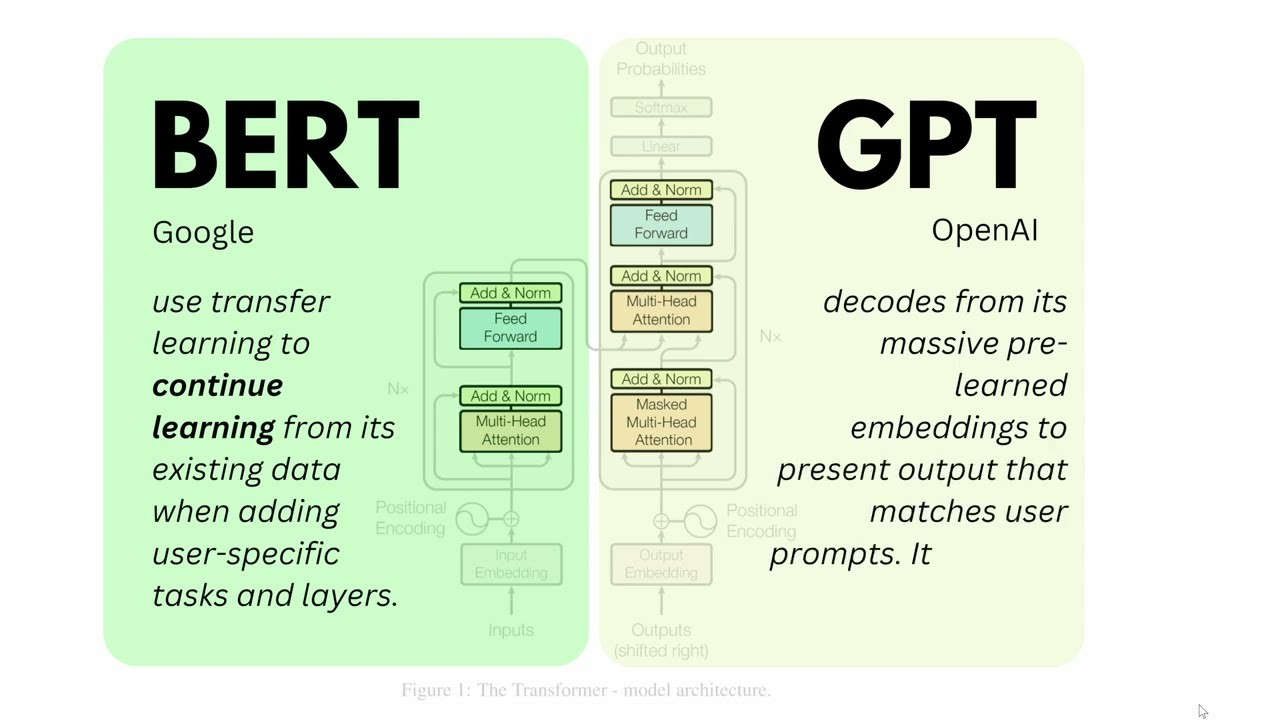

Transformers

- Encoder-only: BERT

Bidirectional Encoder Representations from Transformers

- Decoder-only: GPT

Generative Pre-trained Transformer
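One way to see the encoder/decoder split is through the self-attention mask. The sketch below is a simplification: it ignores padding masks and the rest of the architecture, and `attention_mask` is a hypothetical helper, not a library function. A `True` at `[i, j]` means token `i` may attend to token `j`.

```python
import numpy as np

def attention_mask(seq_len, causal):
    """Boolean self-attention mask of shape (seq_len, seq_len)."""
    full = np.ones((seq_len, seq_len), dtype=bool)
    # Decoder-only (GPT-style): token i sees only tokens 0..i (lower triangle).
    # Encoder-only (BERT-style): every token sees every token (bidirectional).
    return np.tril(full) if causal else full

print(attention_mask(4, causal=False).astype(int))  # BERT-style
print(attention_mask(4, causal=True).astype(int))   # GPT-style
```

The causal mask is what lets a decoder generate text left to right, while the full mask lets an encoder use context on both sides of each token.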

Encoders vs Decoders

BERT vs GPT

- image source: Ronak Verma

Applications

BERT

- text classification

- data labeling

- recommender systems

- sentiment analysis

GPT

- content generation

- conversational chatbots

Distillation

Fine-Tuning

distillation motivation

- image source: Google Research

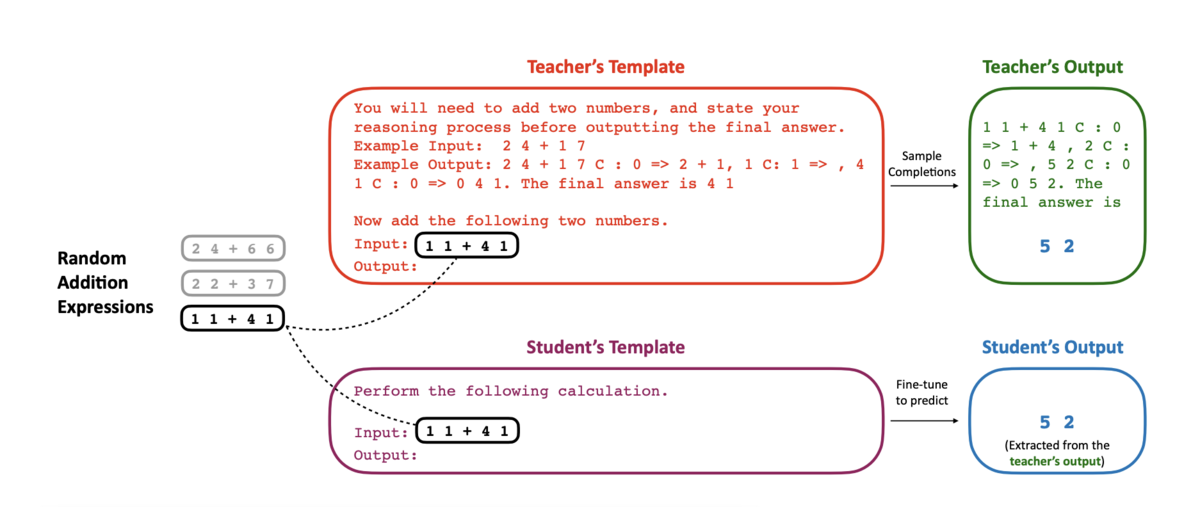

Teacher and Student

teacher and student

- image source: Snorkel AI

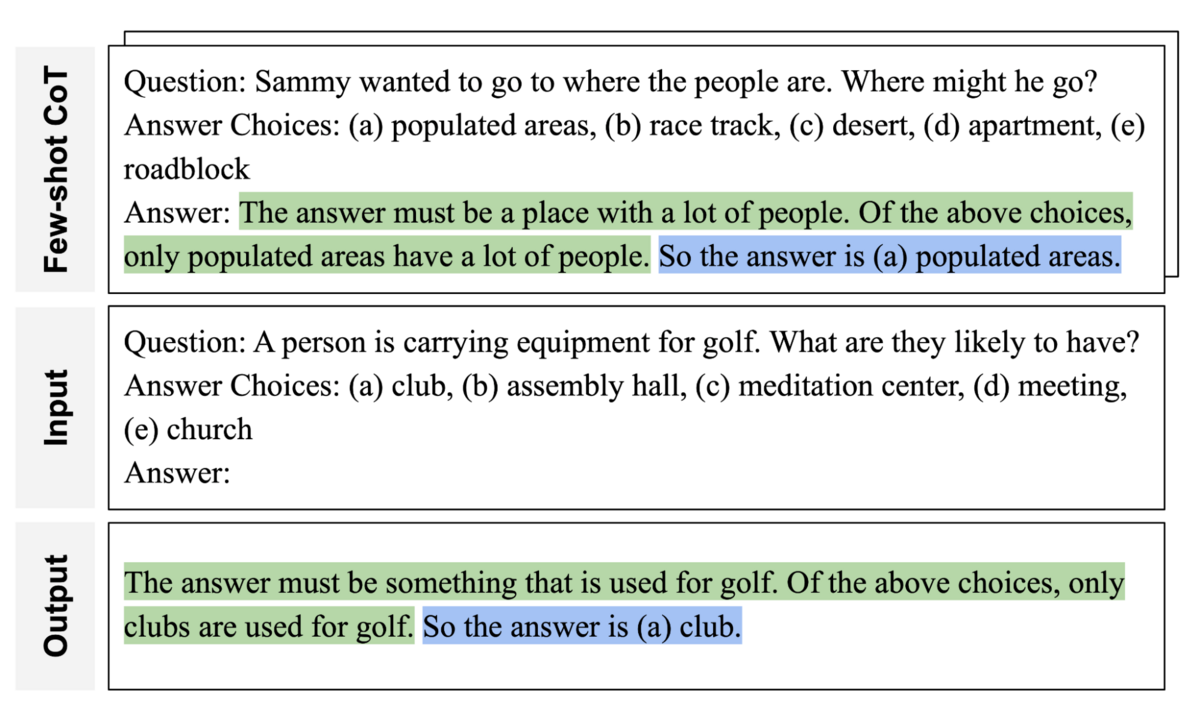
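The teacher-student setup can be written as a single loss. This sketch follows the soft-target recipe of Hinton et al. (2015): the student matches the teacher's temperature-softened distribution through \(KL(p_{\text{teacher}} \,\|\, q_{\text{student}})\), scaled by \(T^2\), blended with ordinary cross-entropy on the true labels. The helper names, `T=2.0`, `alpha=0.5`, and the example logits are illustrative assumptions, and the code computes only the loss value (no gradients or training loop).

```python
import numpy as np

def log_softmax(z, T=1.0):
    """Row-wise log of softmax(z / T); stable for large logits."""
    z = np.asarray(z, dtype=float) / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits, teacher_logits, labels, T=2.0, alpha=0.5):
    """Soft-target distillation loss, averaged over a batch.

    student_logits, teacher_logits: shape (batch, num_classes)
    labels: integer class indices, shape (batch,)
    T, alpha: illustrative defaults, not values from the source
    """
    log_p_teacher = log_softmax(teacher_logits, T)  # soft targets p
    log_q_student = log_softmax(student_logits, T)  # student prediction q
    p_teacher = np.exp(log_p_teacher)
    # KL(p || q) = sum_i p_i log p_i - sum_i p_i log q_i, one value per example
    kl = np.sum(p_teacher * (log_p_teacher - log_q_student), axis=-1)
    # Soft-target gradients shrink roughly as 1/T^2, so scale the term by T^2
    soft = (T ** 2) * kl.mean()
    # Standard cross-entropy with the true labels at T = 1
    labels = np.asarray(labels)
    log_q_hard = log_softmax(student_logits, 1.0)
    hard = -log_q_hard[np.arange(len(labels)), labels].mean()
    return alpha * soft + (1 - alpha) * hard

# Usage: two examples, three classes (hypothetical logits)
teacher = np.array([[3.0, 1.0, 0.2], [0.5, 2.5, 0.0]])
student = np.array([[1.0, 0.8, 0.5], [0.3, 1.0, 0.4]])
labels = np.array([0, 1])
print(distillation_loss(student, teacher, labels))
```

Working with log-probabilities throughout avoids taking the log of a probability that has underflowed to zero.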

Chain of Thought

chain of thought

- image source: Snorkel AI

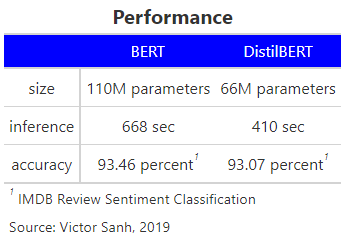

DistilBERT

DistilBERT Performance

DistilBERT performance