23. Context Scaling

Learning objectives

- Adapt to long context inputs

- Review RoPE

Sources

Following the Gen AI Handbook, we looked at

The Problem

Issues with Long Contexts

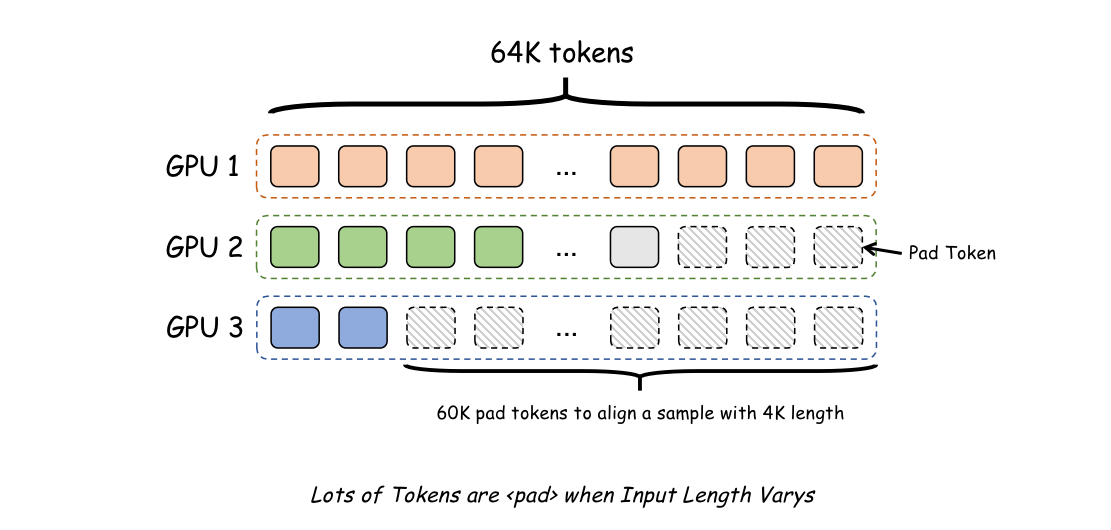

- batch alignment

- memory usage

- attention space: \(O(N^{2})\)
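To get a feel for the \(O(N^{2})\) term, here is a small back-of-the-envelope sketch of the memory needed to materialize the full attention score matrix for one layer and one sequence. The head count (32) and 2-byte values (fp16/bf16) are illustrative assumptions, not figures from the source.

```python
def attention_scores_bytes(seq_len: int, n_heads: int = 32, bytes_per_value: int = 2) -> int:
    """Bytes to store one layer's N x N attention scores for a single sequence.

    n_heads and bytes_per_value are assumed, illustrative defaults.
    """
    return n_heads * seq_len * seq_len * bytes_per_value


for n in (4_096, 32_768, 262_144):
    gib = attention_scores_bytes(n) / 2**30
    print(f"N={n:>7}: {gib:,.1f} GiB per layer")
```

Each 8x increase in sequence length multiplies this figure by 64, which is why naive attention becomes the bottleneck well before the rest of the model does.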

Fixes

- Grouped Query Attention (GQA): groups of query heads share a single key/value head, which shrinks the KV cache

- Gradient checkpointing: for a network of \(N\) layers, stores activations only about every \(\sqrt{N}\) layers and recomputes the rest during the backward pass

- LoRA

- Distributed Training

- Sample packing: short sequences are concatenated into one long sequence, so less of each batch is padding

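- Flash Attention: \(O(N)\) memory in sequence length, since the full \(N \times N\) score matrix is never materialized; compute stays \(O(N^{2})\)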
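As one concrete illustration from the list above, sample packing can be sketched as a bin-packing problem over sequence lengths. The version below uses a greedy first-fit-decreasing heuristic, which is an assumption chosen for simplicity and not necessarily what any particular training library does; `pack_sequences` and the example lengths are hypothetical.

```python
def pack_sequences(lengths: list[int], max_len: int) -> list[list[int]]:
    """Greedy first-fit-decreasing packing.

    Returns a list of bins; each bin holds the indices of the sequences
    that would be concatenated into one packed row of at most max_len tokens.
    """
    bins: list[list[int]] = []
    free: list[int] = []  # remaining room in each bin
    # Place the longest sequences first.
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    for i in order:
        if lengths[i] > max_len:
            raise ValueError(f"sequence {i} is longer than max_len")
        for b, room in enumerate(free):
            if lengths[i] <= room:
                bins[b].append(i)
                free[b] -= lengths[i]
                break
        else:  # no existing bin had room, so open a new one
            bins.append([i])
            free.append(max_len - lengths[i])
    return bins


lengths = [900, 300, 120, 700, 60, 500]  # hypothetical token counts
packed = pack_sequences(lengths, max_len=1024)
print(f"{len(lengths)} padded rows -> {len(packed)} packed rows: {packed}")
```

In practice a packed row also needs an attention mask (and usually reset position ids) so that tokens from one sequence do not attend to another; that part is omitted here.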

Rotary Position Embedding

RoPE

RoPE matrix

- PE vectors maintain magnitude: RoPE rotates query and key vectors, so their norms are unchanged

- resilient when test sequences are longer than training sequences

- image credit: EleutherAI
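The magnitude-preserving property can be checked with a minimal pure-Python sketch of RoPE. It rotates each consecutive pair of dimensions by an angle proportional to the token position, using the common base of 10,000. The vectors `q` and `k` are made-up values, and real implementations operate on batched tensors and may pair dimensions differently.

```python
import math


def rope(x: list[float], pos: int, theta: float = 10_000.0) -> list[float]:
    """Rotate pairs (x[i], x[i+1]) for even i by the angle pos * theta**(-i/d)."""
    d = len(x)
    if d % 2 != 0:
        raise ValueError("dimension must be even")
    out: list[float] = []
    for i in range(0, d, 2):
        angle = pos * theta ** (-i / d)
        c, s = math.cos(angle), math.sin(angle)
        out += [x[i] * c - x[i + 1] * s, x[i] * s + x[i + 1] * c]
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(p * q for p, q in zip(a, b))


q = [0.5, -1.0, 2.0, 0.25]  # hypothetical query vector
k = [1.5, 0.3, -0.7, 1.0]   # hypothetical key vector

# Rotation leaves the squared norm unchanged.
print(dot(q, q), dot(rope(q, 7), rope(q, 7)))

# The attention score depends only on the offset between positions (7 in both cases).
print(dot(rope(q, 3), rope(k, 10)), dot(rope(q, 103), rope(k, 110)))
```

Each printed pair should agree up to floating-point error: the first shows that the norm is preserved, the second that the query-key score is a function of relative position only.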

Experiments

Gradient

- H. Liu et al.: “1M-32K, 10M-131k, 10M-262k, 25M-524k, 50M-1048k (theta-context length) schedule”

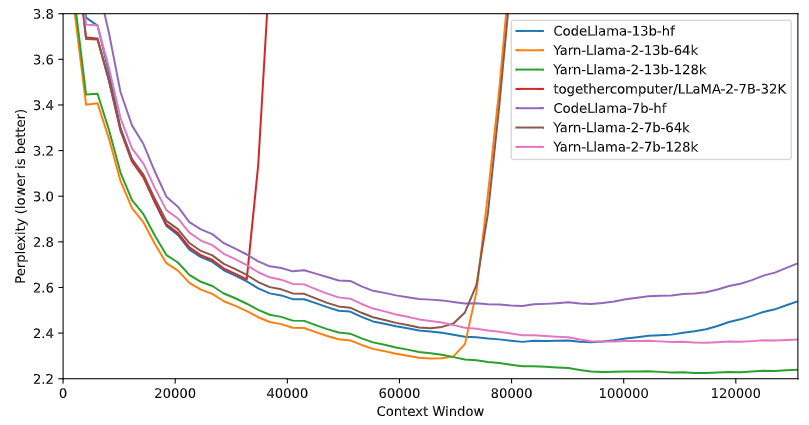
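One way to read the theta-context schedule quoted above is through RoPE wavelengths: raising the base theta slows every rotation, so the slowest-rotating pair takes more tokens to complete a full turn. The sketch below computes that longest wavelength for each stage. The head dimension of 128 and the exact power-of-two context lengths are assumptions for illustration, and this is not presented as the authors' own rationale for the schedule.

```python
import math

# (theta, context length) pairs from the quoted schedule.
# Exact token counts are assumed to be powers of two.
SCHEDULE = [
    (1e6, 32_768),
    (10e6, 131_072),
    (10e6, 262_144),
    (25e6, 524_288),
    (50e6, 1_048_576),
]


def longest_wavelength(theta: float, head_dim: int = 128) -> float:
    """Tokens per full rotation of the slowest RoPE pair.

    The slowest pair has frequency theta**(-(d - 2) / d), so its
    wavelength is 2 * pi * theta**((d - 2) / d). head_dim=128 is assumed.
    """
    return 2 * math.pi * theta ** ((head_dim - 2) / head_dim)


for theta, ctx in SCHEDULE:
    wl = longest_wavelength(theta)
    print(f"theta={theta:>12,.0f}  context={ctx:>9,}  longest wavelength ~ {wl:,.0f} tokens")
```

Under these assumptions the longest wavelength stays well above the context length at every stage of the schedule.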

Llama with YaRN

- image credit: EleutherAI