21. Reward Models and RLHF

RLHF

Learning objectives

- Continue discussing fine-tuning

- Motivate reward models

- Elaborate on RLHF’s role in AI history

Sources

Following the Gen AI Handbook, we looked at

Fine-Tuning

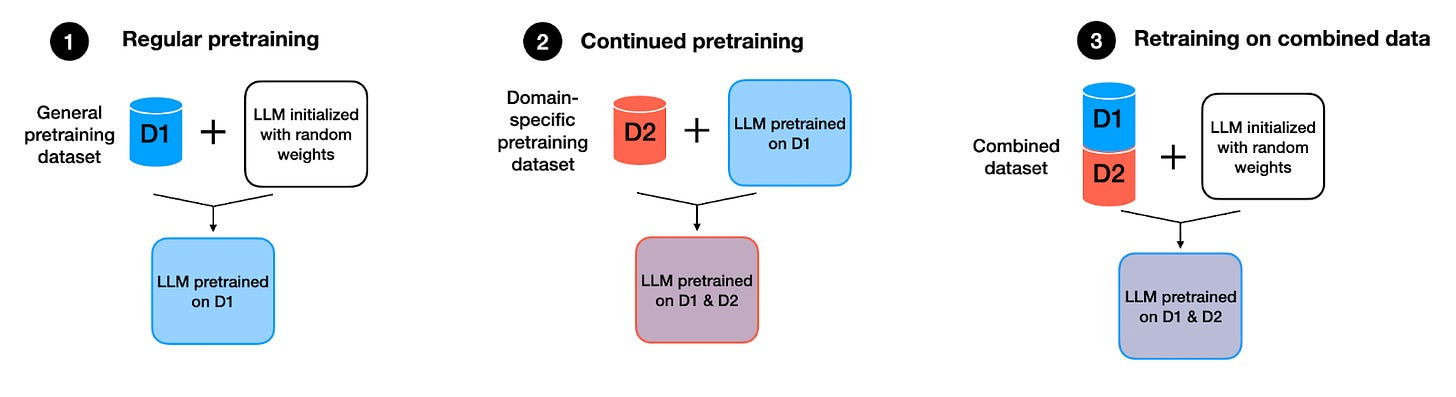

Continued Pre-Training

continued pre-training

- image source: Sebastian Raschka

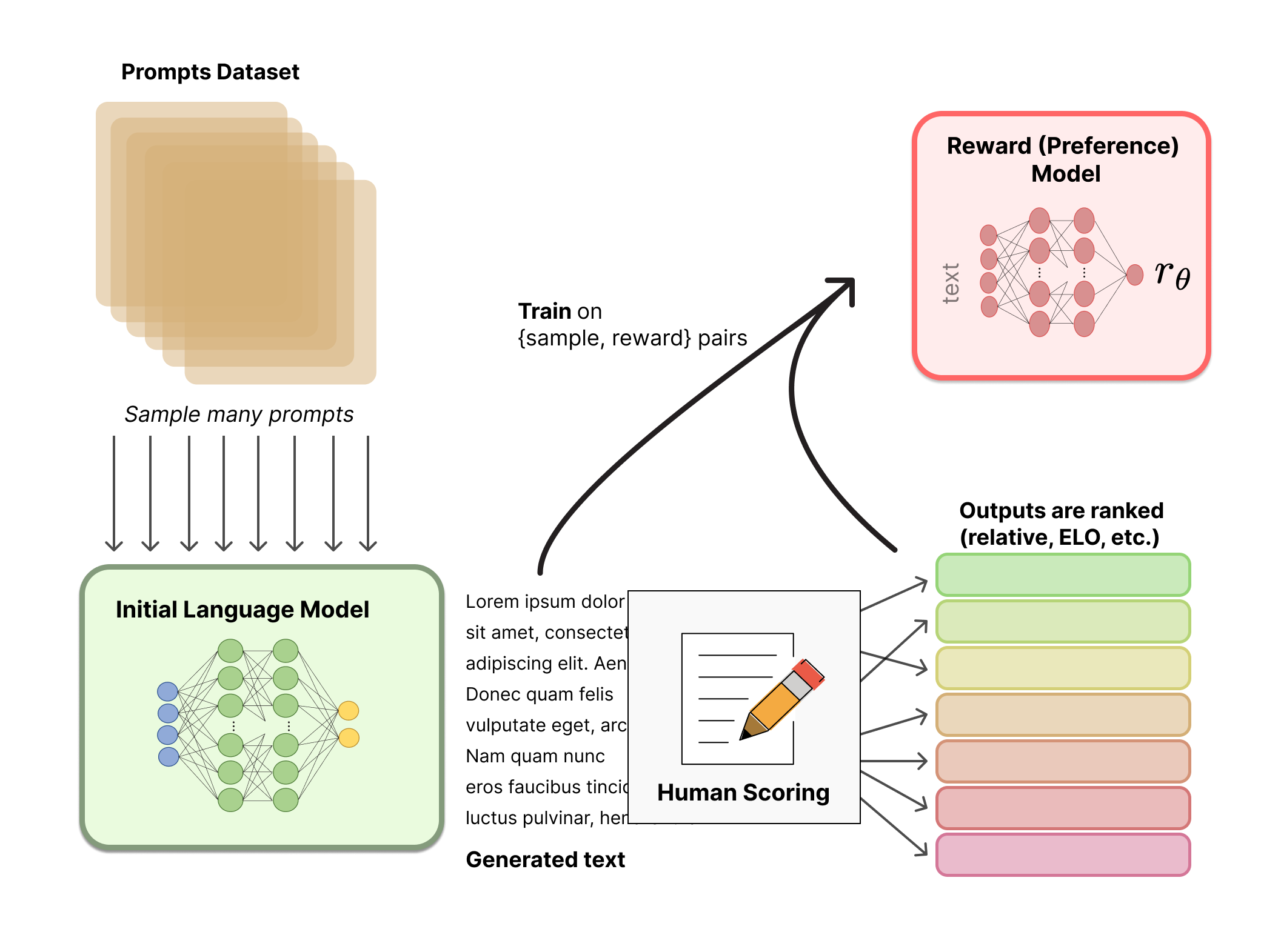

Reward Models

RL

reinforcement learning

- image source: Hugging Face

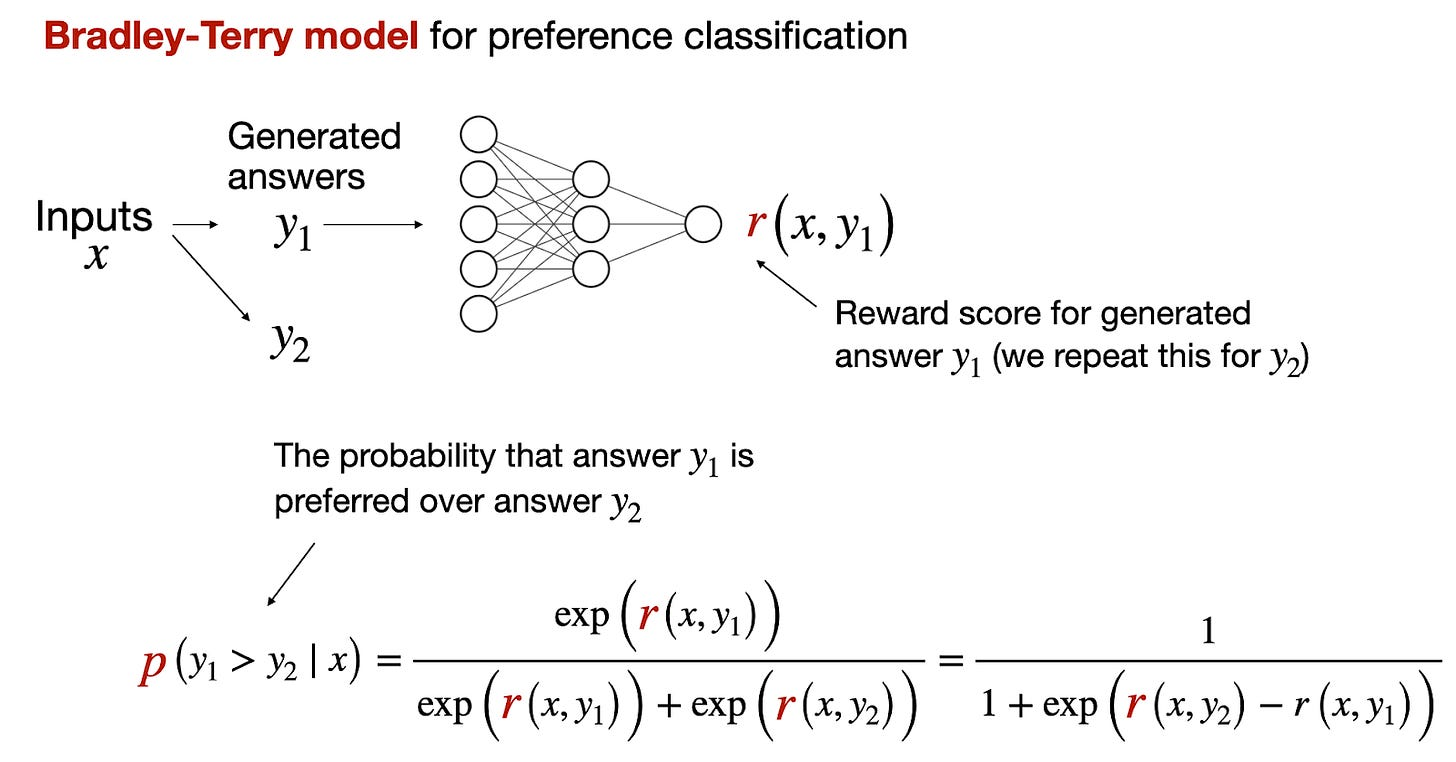

Preference Classification

preference classification

- image source: Sebastian Raschka

Loss Function

- \(s_{w} = r_{\theta}(x, y_{w})\): reward for winning response

- \(s_{\ell} = r_{\theta}(x, y_{\ell})\): reward for losing response

- Goal: minimize expected loss

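\[\mathcal{L}(\theta) = -\mathbb{E}_{(x,\, y_{w},\, y_{\ell})}\left[\log \sigma(s_{w} - s_{\ell})\right]\]

where \(\sigma\) is the logistic sigmoid and the expectation is over the preference dataset of (prompt, winner, loser) triples.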

That is, minimizing this loss pushes the reward model to score the winning response above the losing one; the loss is largest when \(s_{w} \ll s_{\ell}\).
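A minimal sketch of the pairwise loss above, assuming the reward model has already produced the scalar scores \(s_{w}\) and \(s_{\ell}\) for each comparison. The helper names `log_sigmoid` and `reward_model_loss` are illustrative, not from any particular library; the batch mean stands in for the expectation.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigma(z))."""
    # For z >= 0: log(sigma(z)) = -log(1 + e^{-z}); for z < 0: z - log(1 + e^{z}).
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def reward_model_loss(s_w: list[float], s_l: list[float]) -> float:
    """Batch mean of -log(sigma(s_w - s_l)) over preference pairs.

    s_w[i] and s_l[i] are r_theta(x_i, y_w) and r_theta(x_i, y_l)
    for the i-th comparison (assumed precomputed).
    """
    if len(s_w) != len(s_l) or not s_w:
        raise ValueError("need equal-length, non-empty score lists")
    losses = [-log_sigmoid(w - l) for w, l in zip(s_w, s_l)]
    return sum(losses) / len(losses)


# Hypothetical scores: correctly ordered, tied, badly misordered.
print(reward_model_loss([2.0], [0.0]))  # small loss
print(reward_model_loss([0.0], [0.0]))  # ≈ 0.693 (= log 2)
print(reward_model_loss([0.0], [5.0]))  # large loss, since s_w << s_l
```

Only the score difference matters, so adding the same constant to every reward leaves the loss unchanged.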

Reinforcement Learning from Human Feedback

RLHF

RLHF workflow

- image source: Hugging Face

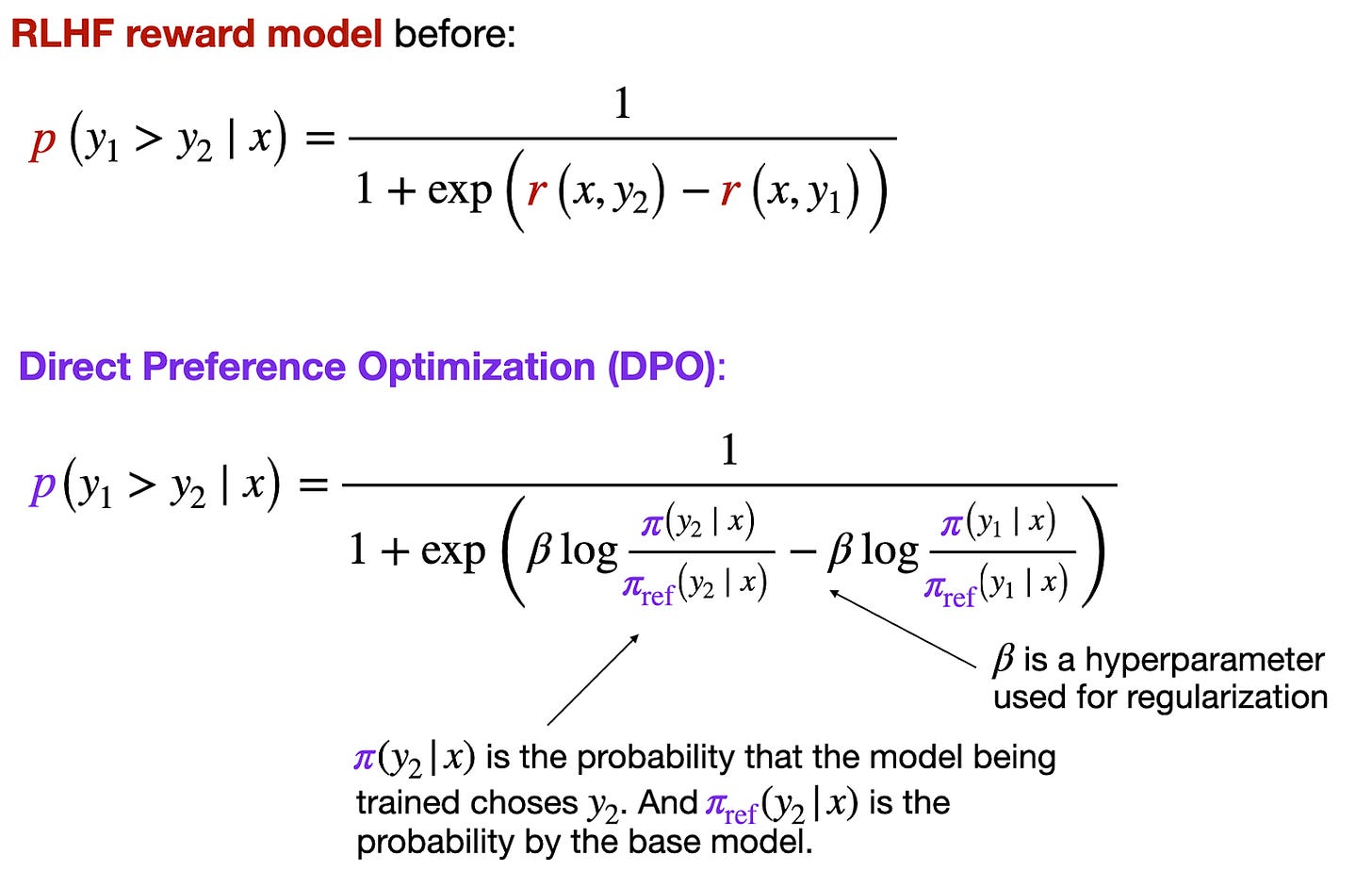
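In a common RLHF setup (for example, InstructGPT), the policy is tuned with RL (typically PPO) to earn high reward-model scores while a penalty keeps it from drifting too far from the frozen reference (SFT) model. A minimal sketch of that per-response reward signal, assuming token log-probabilities from both models are already available; the function name and the `beta=0.1` default are hypothetical, and the PPO update itself is not shown.

```python
def kl_penalized_reward(
    reward: float,
    policy_token_logprobs: list[float],
    ref_token_logprobs: list[float],
    beta: float = 0.1,  # hypothetical penalty strength
) -> float:
    """Reward for one sampled response y to prompt x during RLHF.

    reward: r_theta(x, y) from the trained reward model.
    *_token_logprobs: log-probability of each token of y under the policy
    being tuned and under the frozen reference model.
    """
    if len(policy_token_logprobs) != len(ref_token_logprobs):
        raise ValueError("both models must score the same tokens")
    # log pi(y|x) - log pi_ref(y|x): a single-sample estimate of the KL divergence
    log_ratio = sum(policy_token_logprobs) - sum(ref_token_logprobs)
    return reward - beta * log_ratio


# Policy identical to the reference: no penalty is applied.
print(kl_penalized_reward(1.0, [-0.5, -0.2], [-0.5, -0.2]))  # 1.0
```

Larger `beta` keeps the tuned model closer to the reference, which limits how far it can exploit quirks of the reward model.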

Toward DPO

toward DPO

- image source: Sebastian Raschka

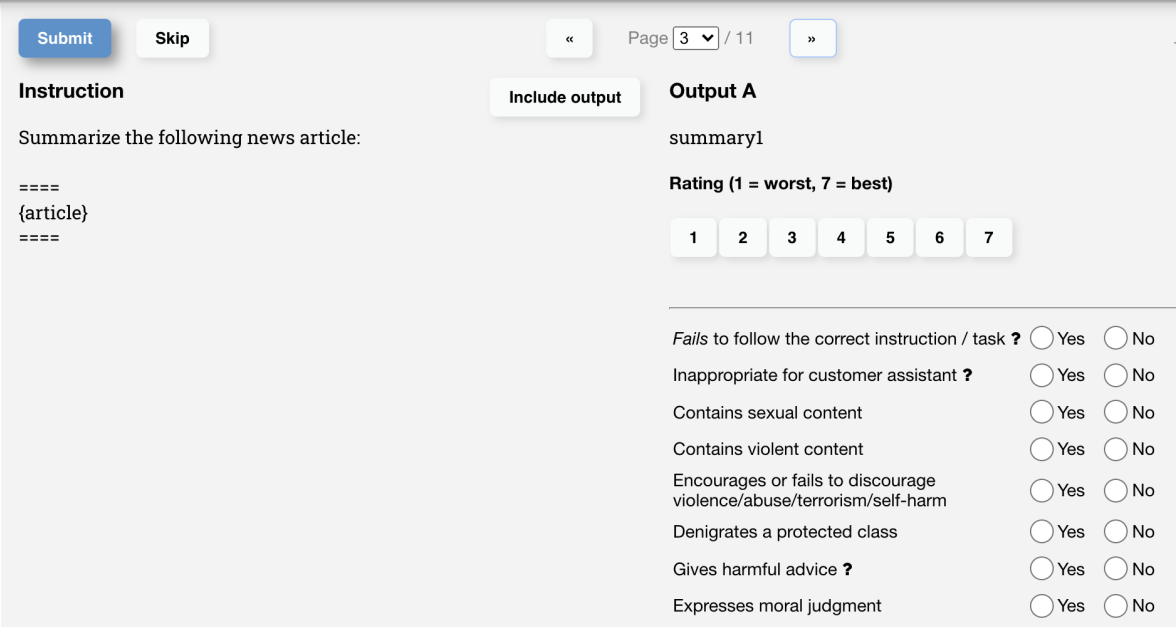
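Direct Preference Optimization (DPO) drops the separate reward model and the RL loop: it treats \(\beta \log \frac{\pi_{\theta}(y \mid x)}{\pi_{\text{ref}}(y \mid x)}\) as an implicit reward and plugs it into the same \(-\log \sigma(s_{w} - s_{\ell})\) loss used to train a reward model. A minimal sketch for one preference pair, assuming the summed log-probability of each full response under the policy and a frozen reference model has already been computed; the function name and `beta=0.1` default are illustrative.

```python
import math


def dpo_loss(
    policy_logp_w: float,
    policy_logp_l: float,
    ref_logp_w: float,
    ref_logp_l: float,
    beta: float = 0.1,  # illustrative default
) -> float:
    """DPO loss for one (x, y_w, y_l) triple.

    Each argument is log pi(y | x) summed over the tokens of a response.
    """
    # Implicit rewards: beta * (log pi_theta - log pi_ref)
    s_w = beta * (policy_logp_w - ref_logp_w)
    s_l = beta * (policy_logp_l - ref_logp_l)
    z = s_w - s_l
    # Numerically stable -log(sigma(z))
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


# Policy not yet moved from the reference: both implicit rewards are 0.
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))  # ≈ 0.693 (= log 2)
```

The loss falls as the policy raises the winner's probability relative to the reference more than it raises the loser's.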

Human Feedback (moderation)

human feedback for moderation

- image source: Chip Huyen

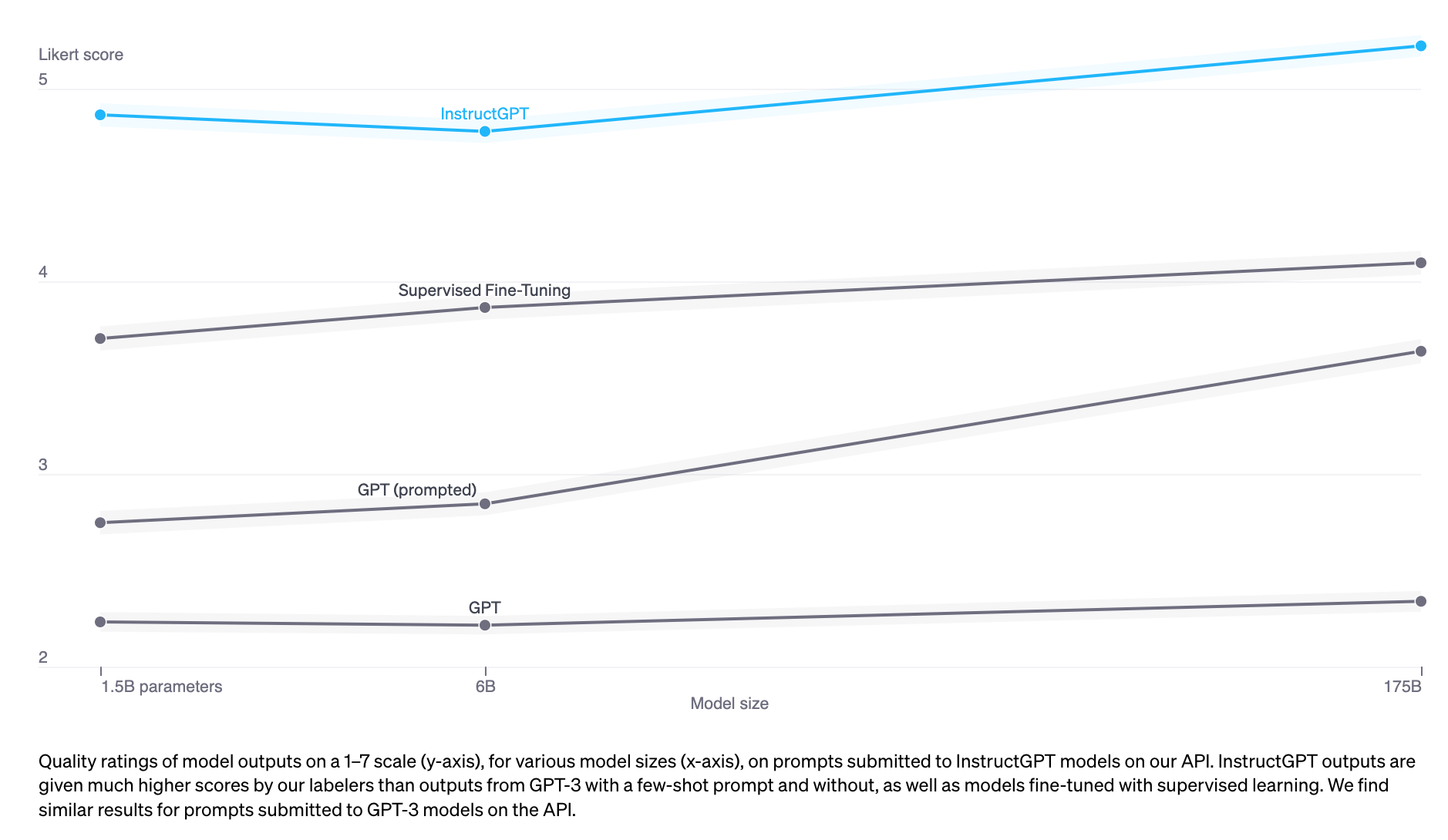

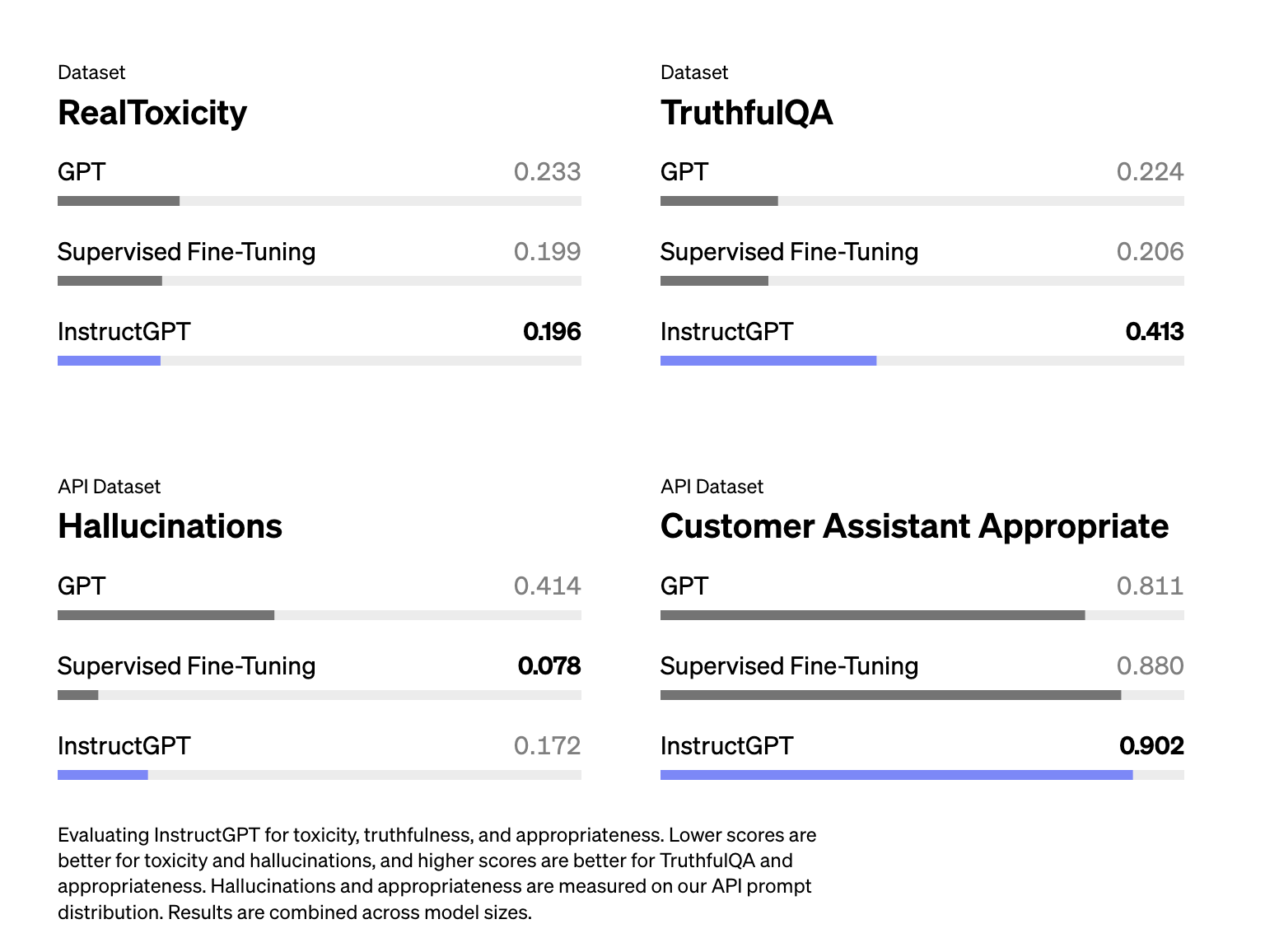

Results

Quality of Model Outputs

Bai et al., 2022

Selling Points

Schulman, 2023