14. Positional Encoding

Bryan Tegomoh

2025-03-30

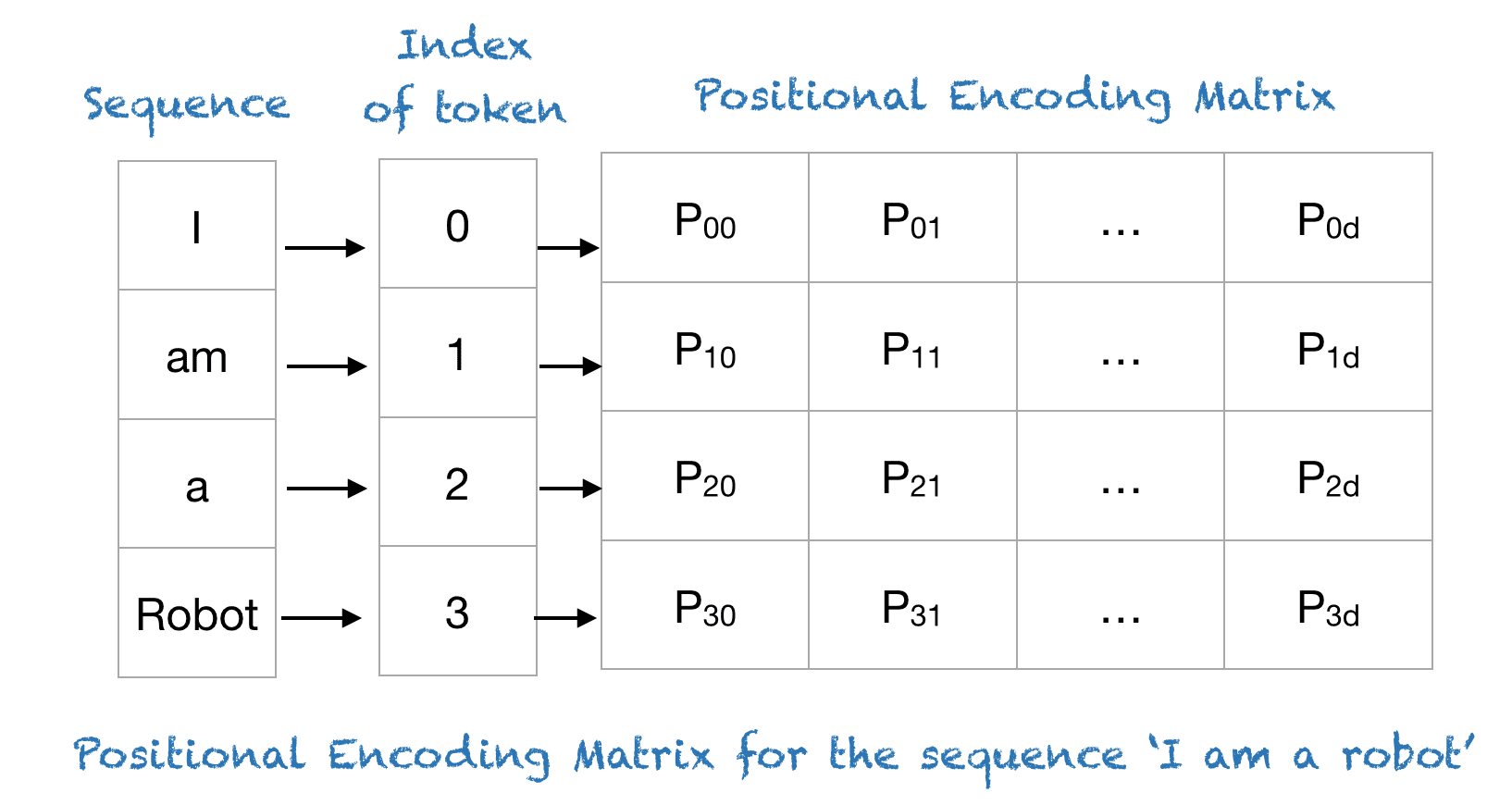

Chapter 14: Positional Encoding

Why Positional Encoding?

Transformers lack recurrence (unlike RNNs)

No inherent sense of word order

Solution: Add positional info to token embeddings


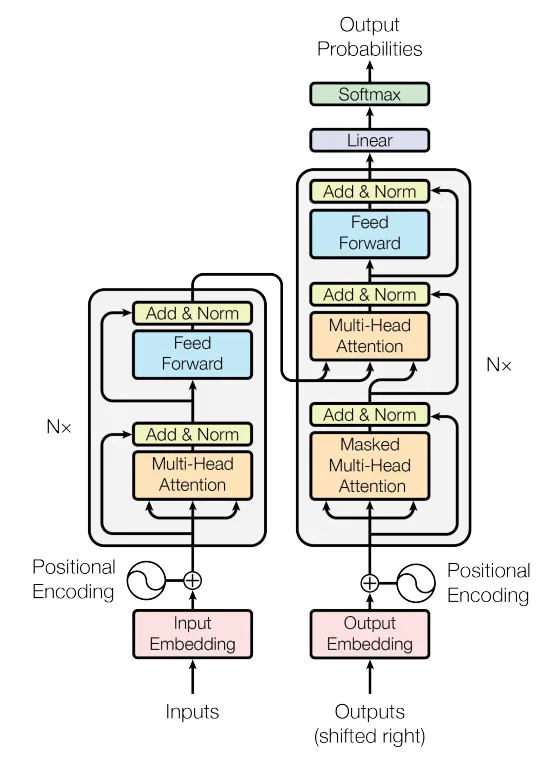

The Power of Positional Encoding

Transformer Architecture

Naive Approaches

- One-hot encoding: Unique vector per position

- Issue: Doesn’t scale, no ordinality

- Linear indices: 1, 2, 3, …

- Issue: Large values, poor generalization

- Need: Unique, bounded, relative-aware encoding
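The two naive schemes can be sketched in a few lines to make their weaknesses concrete. The function names `one_hot`, `linear_index`, and `squared_distance` are hypothetical helpers introduced only for this illustration.

```python
def one_hot(position, max_len):
    """One-hot vector: unique per position, but its length grows with max_len."""
    vec = [0.0] * max_len
    vec[position] = 1.0
    return vec


def linear_index(position):
    """Raw index: compact, but unbounded as sequences get longer."""
    return float(position)


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


# Every pair of distinct one-hot vectors is equally far apart,
# so the encoding says nothing about which positions are neighbours.
near = squared_distance(one_hot(0, 8), one_hot(1, 8))
far = squared_distance(one_hot(0, 8), one_hot(7, 8))

# The raw index keeps order but its magnitude grows without bound.
large = linear_index(5000)
```

Here `near` and `far` are equal, which illustrates the "no ordinality" issue, while `large` shows how a raw index can dwarf typical embedding values.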

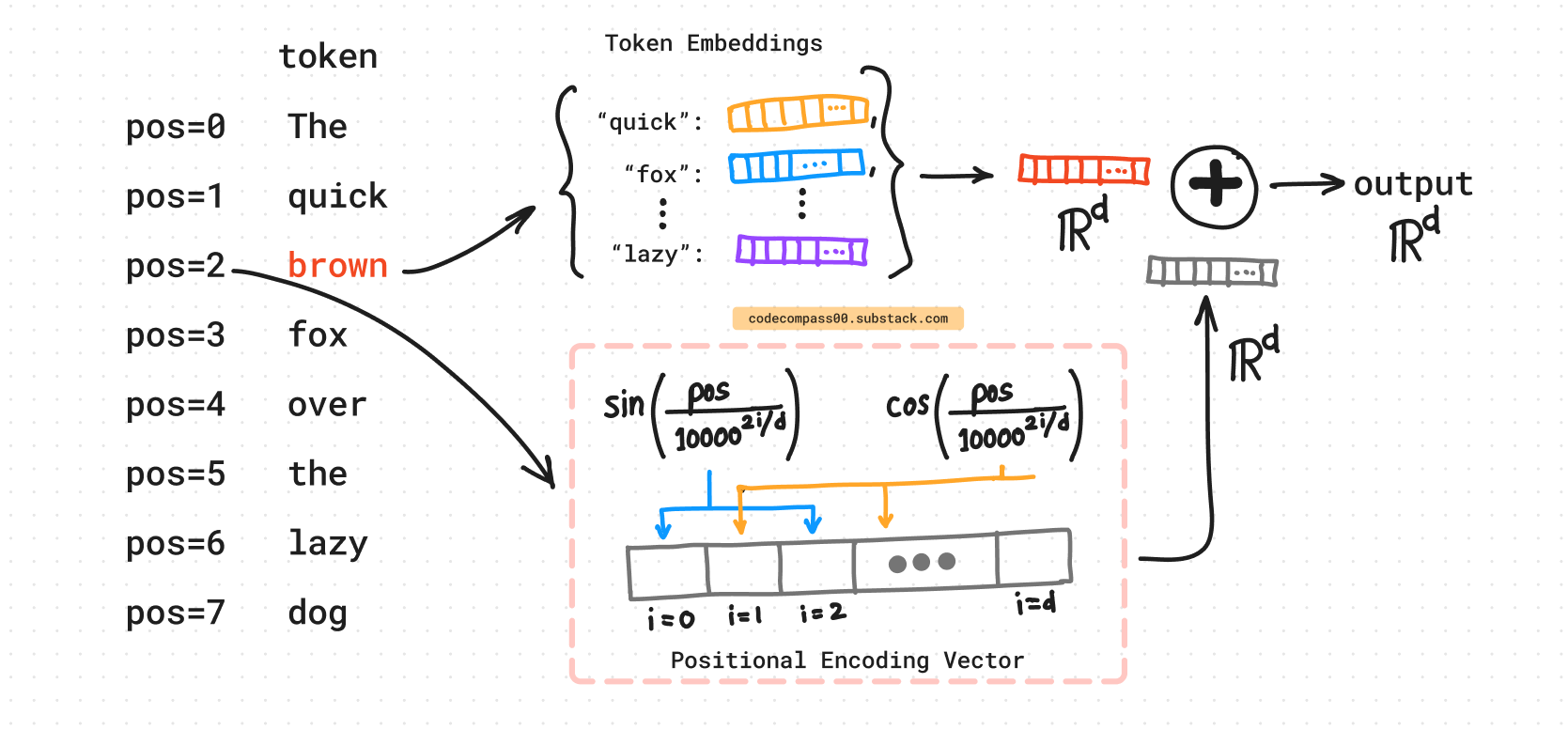

Sinusoidal Positional Encoding

- Proposed in “Attention Is All You Need” (Vaswani et al., 2017)

- For position \( k \), embedding dimension \( d \), and pair index \( i = 0, \dots, d/2 - 1 \):

- \( P(k, 2i) = \sin\left(k / 10000^{2i/d}\right) \)

- \( P(k, 2i+1) = \cos\left(k / 10000^{2i/d}\right) \)

- Vector per position, added to token embedding
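A minimal sketch of the sinusoidal formula, assuming an even embedding dimension and plain Python lists in place of tensors. The names `sinusoidal_encoding` and `add_position` are illustrative, not taken from any library. Even indices hold the sine term and odd indices hold the cosine term for the same frequency.

```python
import math


def sinusoidal_encoding(k, d, base=10000.0):
    """Return the d-dimensional sinusoidal encoding for position k (d must be even)."""
    if d % 2 != 0:
        raise ValueError("d must be even")
    vec = [0.0] * d
    for i in range(d // 2):
        angle = k / base ** (2 * i / d)   # k / 10000^(2i/d)
        vec[2 * i] = math.sin(angle)      # even index: sine
        vec[2 * i + 1] = math.cos(angle)  # odd index: cosine
    return vec


def add_position(token_embedding, k):
    """Add the encoding for position k to a token embedding of the same length."""
    pe = sinusoidal_encoding(k, len(token_embedding))
    return [x + p for x, p in zip(token_embedding, pe)]
```

For example, `add_position(embedding, 3)` would return the embedding of the fourth token with its position signal added. Every component of the encoding stays within [-1, 1] regardless of position.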

How It Works

- Sine/cosine pairs at varying frequencies

- Wavelengths: \( 2\pi \) to \( 10000 \cdot 2\pi \)

- Properties:

- Unique per position

- Consistent distances: similarity between two positions depends only on their offset

- May generalize to sequences longer than those seen in training
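The offset property can be checked numerically. Because sin(a)sin(b) + cos(a)cos(b) = cos(a - b), the dot product of two encodings depends only on the distance between their positions. The sketch below assumes a 64-dimensional encoding and an offset of 5. These values, and the helper names, are chosen only for illustration.

```python
import math


def sinusoidal_encoding(k, d, base=10000.0):
    """Sine/cosine pairs for position k, one pair per frequency."""
    vec = []
    for i in range(d // 2):
        angle = k / base ** (2 * i / d)
        vec += [math.sin(angle), math.cos(angle)]
    return vec


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


d = 64
offset = 5

# Same offset at two very different absolute positions.
sim_early = dot(sinusoidal_encoding(3, d), sinusoidal_encoding(3 + offset, d))
sim_late = dot(sinusoidal_encoding(400, d), sinusoidal_encoding(400 + offset, d))

# Wavelength of pair i is 2*pi * 10000^(2i/d): it starts at 2*pi
# and approaches 10000 * 2*pi for the last pair.
wavelengths = [2 * math.pi * 10000.0 ** (2 * i / d) for i in range(d // 2)]
```

`sim_early` and `sim_late` agree up to floating-point error, and `wavelengths` increases geometrically from its first entry to its last.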

Visualization

- Imagine a 512-dim encoding for a 100-token sequence

- See Mehreen Saeed’s blog for a plot

- Each row = position, each column = dimension

- Frequency falls gradually from left to right, giving each position a distinct row pattern
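As a rough stand-in for the plot, the 100 x 512 matrix can be built directly and its columns compared. This sketch counts zero crossings per column as a simple proxy for frequency. The helpers `encoding_matrix` and `sign_changes` are hypothetical names used only here.

```python
import math


def encoding_matrix(num_positions, d, base=10000.0):
    """Rows are positions, columns are encoding dimensions."""
    matrix = []
    for k in range(num_positions):
        row = []
        for i in range(d // 2):
            angle = k / base ** (2 * i / d)
            row += [math.sin(angle), math.cos(angle)]
        matrix.append(row)
    return matrix


def sign_changes(matrix, column):
    """Count how often a column crosses zero as the position increases."""
    values = [row[column] for row in matrix]
    return sum(1 for a, b in zip(values, values[1:]) if a * b < 0)


pe = encoding_matrix(100, 512)
fast = sign_changes(pe, 0)    # sine column with the shortest wavelength
slow = sign_changes(pe, 510)  # sine column with the longest wavelength
```

The first column oscillates many times over 100 positions while the last sine column never crosses zero. Passing `pe` to a heatmap routine in a plotting library should show the same banded pattern.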

Modern Twist: RoPE

- Rotary Positional Encoding (RoPE):

- Rotates query and key vectors by a position-dependent angle

- More stable, faster to learn

- Widely used in frontier LLMs (e.g., LLaMA)

- Check EleutherAI’s blog for details
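A minimal sketch of the rotation idea, assuming adjacent dimensions (2i, 2i+1) are paired and the same base of 10000 is used for the frequencies. Real implementations may pair dimensions differently and operate on batched tensors. The function `rope` and the sample vectors `q` and `k` are illustrative only.

```python
import math


def rope(vec, position, base=10000.0):
    """Rotate each adjacent pair (2i, 2i+1) of vec by the angle position * theta_i."""
    d = len(vec)
    if d % 2 != 0:
        raise ValueError("vector length must be even")
    out = [0.0] * d
    for i in range(d // 2):
        theta = base ** (-2 * i / d)  # per-pair rotation frequency
        c = math.cos(position * theta)
        s = math.sin(position * theta)
        x, y = vec[2 * i], vec[2 * i + 1]
        out[2 * i] = x * c - y * s      # standard 2-D rotation
        out[2 * i + 1] = x * s + y * c
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q = [0.5, -1.0, 2.0, 0.25]  # example query vector
k = [1.5, 0.3, -0.7, 1.0]   # example key vector

# Same relative offset (5) at different absolute positions.
score_a = dot(rope(q, 2), rope(k, 7))
score_b = dot(rope(q, 102), rope(k, 107))
```

The two scores match up to floating-point error, which shows that the attention score between a rotated query and key depends on their relative position. The rotation also leaves vector norms unchanged.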