13. Tokenization

Bryan Tegomoh

2025-03-30

Welcome to the Lecture

Section III: Foundations for Modern Language Modeling

Focus:

Chapter 13: Tokenization

Chapter 14: Positional Encoding

Goal: Understand key concepts for training modern LLMs

Tokenization

“Tokenization is at the heart of much weirdness of LLMs” - Karpathy

- Why can’t LLM spell words? Tokenization.

- Why can’t LLM do super simple string processing tasks? Tokenization.

- Why is LLM worse at non-English languages (e.g. Japanese)? Tokenization.

- Why is LLM bad at simple arithmetic? Tokenization.

- Why did my LLM abruptly halt when it sees the string “<|endoftext|>”? Tokenization.

- Why is LLM not actually end-to-end language modeling? Tokenization.

- What is the real root of suffering? Tokenization.

Quick Recap: What Came Before (1/2)

- Introduction:

- The AI Landscape

- The Content Landscape

- Preliminaries: Math (calculus, linear algebra), Programming (Python basics)

- Section I: Foundations of Sequential Prediction

- Statistical Prediction & Supervised Learning

- Time-Series Analysis

- Online Learning and Regret Minimization

- Reinforcement Learning

- Markov Models

Quick Recap: What Came Before (2/2)

- Section II: Neural Sequential Prediction

- Statistical Prediction with Neural Networks

- Recurrent Neural Networks (RNNs)

- LSTMs and GRUs (Long Short-Term Memory and Gated Recurrent Unit networks)

- Embeddings and Topic Modeling

- Encoders and Decoders

- Decoder-Only Transformers

Why This Matters

- Builds from decoder-only Transformers to Modern LLM Foundations

- Key questions:

- How do we process text efficiently?

- How do we encode word order without recurrence?

- Rapid AI progress (Where we were in 2022 → today)

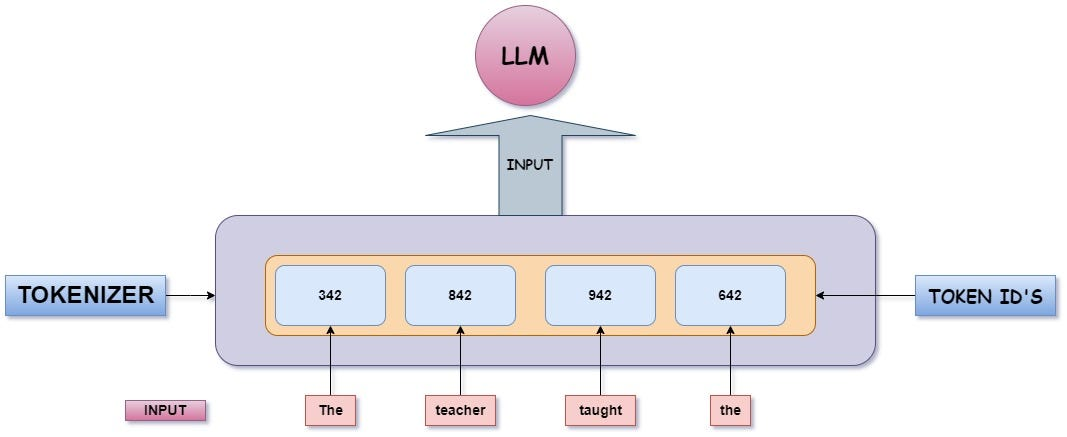

What is Tokenization?

- Converting text into manageable units (tokens) for LLMs

- Why it’s critical:

- LLMs process tokens, not raw characters or full words

- Impacts efficiency and generalization

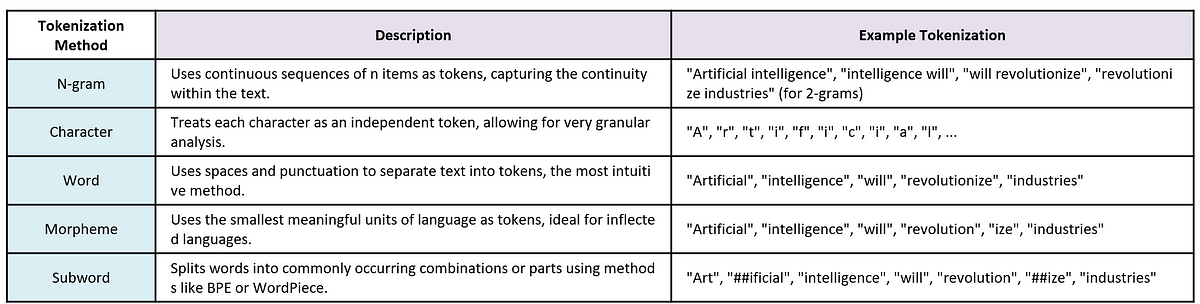

Tokenization Approaches

- Character-level: Each char = token

- Pros: Simple, handles all inputs

- Cons: Inefficient (long sequences)

- Word-level: Each word = token

- Pros: Intuitive, shorter sequences

- Cons: Fixed vocab → cannot represent unseen words or misspellings
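
The trade-off between the two approaches can be made concrete with a small sketch. The sentence, the whitespace splitting rule, and the `unk_id=-1` placeholder for unknown words are all illustrative assumptions, not part of the lecture material.

```python
text = "the cat sat on the mat"

# Character-level: every character (including spaces) is one token.
char_tokens = list(text)

# Word-level: split on whitespace and build a fixed vocabulary from the text.
word_tokens = text.split()
word_vocab = {w: i for i, w in enumerate(sorted(set(word_tokens)))}

def encode_words(s, vocab, unk_id=-1):
    """Map each whitespace-separated word to its ID, or unk_id if unseen.

    unk_id is a hypothetical placeholder for out-of-vocabulary words.
    """
    return [vocab.get(w, unk_id) for w in s.split()]

# Same sentence: 22 character tokens vs. 6 word tokens.
n_chars, n_words = len(char_tokens), len(word_tokens)
print(n_chars, n_words)

# The misspelling "matt" is not in the fixed vocabulary, so it maps to unk_id.
ids = encode_words("the cat sat on the matt", word_vocab)
print(ids)
```

The character-level sequence is several times longer, while the word-level encoder loses all information about the misspelled word.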

Subword-Level Tokenization

- Solution: Split words into subword units

- Example: “playing” → “play” + “##ing” (“##” is the WordPiece marker for a piece that continues a word)

- Algorithm: Byte-Pair Encoding (BPE)

- Starts with character-level tokens

- Iteratively merges the most frequent adjacent pair

- Analogy: Huffman coding (but dynamic)

- Result: Compact, adaptive vocabulary

- See Andrej Karpathy’s video for intuition

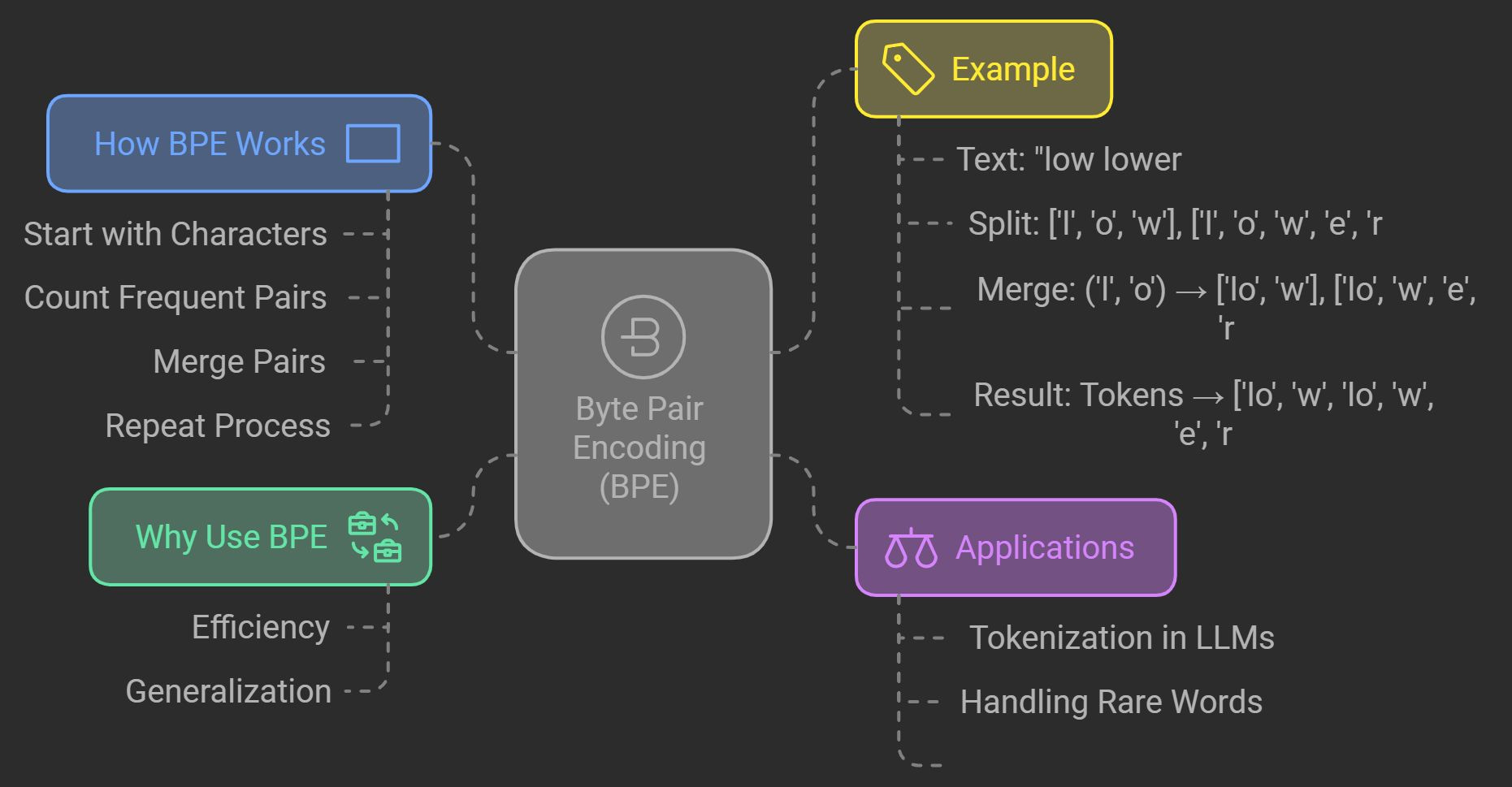

Byte-Pair Encoding (BPE)

BPE Example

- Text: “low lower lowest”

- Initial: l o w, l o w e r, l o w e s t

- Merge l+o → lo, then lo+w → low

- Merge low+e → lowe

- Final vocab: low, lowe, r, s, t
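
The merge loop in this example can be sketched in a few lines of Python. This is a minimal illustration, not a production tokenizer: the function names (`get_pair_counts`, `merge_pair`, `train_bpe`) are hypothetical, words are split on whitespace, and ties between equally frequent pairs are broken by first occurrence, which is an assumption rather than a rule stated in the slides.

```python
from collections import Counter

def get_pair_counts(words):
    """Count adjacent symbol pairs across all words, weighted by word frequency."""
    counts = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            counts[(a, b)] += freq
    return counts

def merge_pair(words, pair):
    """Replace every occurrence of `pair` with the concatenated symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def train_bpe(text, num_merges):
    """Learn up to `num_merges` merge rules from whitespace-separated text."""
    # Start from character-level symbols for each word.
    words = Counter(tuple(w) for w in text.split())
    merges = []
    for _ in range(num_merges):
        counts = get_pair_counts(words)
        if not counts:
            break
        # Most frequent pair; ties go to the pair seen first (an assumption).
        best = max(counts, key=counts.get)
        words = merge_pair(words, best)
        merges.append(best)
    return merges, words

merges, words = train_bpe("low lower lowest", 3)
print(merges)       # [('l', 'o'), ('lo', 'w'), ('low', 'e')]
print(list(words))  # [('low',), ('lowe', 'r'), ('lowe', 's', 't')]
```

With three merges the text is segmented into low, lowe, r, s, and t, matching the example. Real tokenizers run thousands of merges over a large corpus and usually operate on bytes rather than characters.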

Why Subword Tokenization?

- Covers unseen words (e.g., “lowering” → “lowe” + “r” + “##ing”, assuming “ing” has also been learned as a subword)

- Reduces sequence length vs. character-level

- Used in most modern LLMs (e.g., byte-level BPE in GPT, WordPiece in BERT)
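
To see how an unseen word is covered, the sketch below applies the three merge rules from the “low lower lowest” example to new words. The `bpe_encode` helper is hypothetical, and the merge list is hard-coded from that example. Because the toy corpus never contained “ing”, that suffix stays as single characters here; a larger training corpus would likely learn it as one unit.

```python
# Merge rules in the order they were learned from "low lower lowest".
MERGES = [("l", "o"), ("lo", "w"), ("low", "e")]

def bpe_encode(word, merges):
    """Segment a word by applying merge rules in learned order."""
    symbols = list(word)  # start from characters
    for a, b in merges:
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and symbols[i] == a and symbols[i + 1] == b:
                out.append(a + b)
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        symbols = out
    return symbols

tokens = bpe_encode("lowering", MERGES)
print(tokens)                      # ['lowe', 'r', 'i', 'n', 'g']
print(bpe_encode("slow", MERGES))  # ['s', 'low']
```

Neither word appeared in training, yet both are representable: “lowering” takes 5 tokens instead of 8 characters, and nothing falls back to an unknown-word symbol.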