Choosing the Right Server for You

Learning objectives

- Appreciate computing hardware and software

- CPU vs. GPU

- RAM vs. storage

What is Computing?

Everything on a computer is represented by a (usually very large) number.

At the hardware level, nearly everything a computer does boils down to simple arithmetic on these numbers, such as adding them together.

Modern computers add very quickly and very accurately.

Computing speed is limited by the number of cores and their clock speed

classroom as a computer

CPU: The physical brain

Cores: Available processing centers in a CPU (usually 4-32)

Clock speed: number of operations each core can perform per second (2-5 GHz, i.e. 2-5 billion cycles per second)

Most recent improvements come from more cores and better use of them, not faster clocks

Moore’s law

How do I go faster…?

For R/Python, fewer faster cores are usually preferable to many slower cores

For servers, keep it light:

\(\textrm{Necessary cores} = 1 \textrm{ core} \times n \textrm{ users} + 1\)
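As a minimal sketch, the rule of thumb above (one core per user, plus one) can be written as a small helper. The function name `recommended_cores` is hypothetical, and reading the `+ 1` as a spare core for the server itself is an assumption rather than something the slide states.

```python
def recommended_cores(n_users: int) -> int:
    """Rule of thumb: one core per concurrent user, plus one extra.

    Assumption: the extra core is headroom for the operating system
    and the server software itself.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    return n_users + 1


# Print the suggested core count for a few team sizes.
for users in (1, 5, 20):
    print(f"{users} concurrent users -> {recommended_cores(users)} cores")
```

This counts concurrent users, not everyone with an account, so a team of 20 where only 5 work at once would be sized for 5.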

GPU

Specialized chips initially built for graphics

Many more, but slower, cores than a CPU

Where a consumer-grade CPU has 4-16 cores, mid-range GPUs have 700-4,000 cores, but each one runs at between 1% and 10% of the speed of a CPU core.

- Great for parallelisation (think Neural Nets)
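To make the trade-off concrete, here is a rough back-of-the-envelope sketch using the ranges quoted above. It assumes the work splits perfectly across cores, which real workloads rarely do, and the example core counts (8 CPU cores, 2,000 GPU cores) are illustrative picks from those ranges, not measurements.

```python
def core_equivalents(n_cores: int, speed_percent: int) -> float:
    """Aggregate throughput in units of 'one CPU core'.

    speed_percent is the speed of each core relative to a CPU core.
    Assumes perfectly parallel work (an idealisation).
    """
    return n_cores * speed_percent / 100


cpu = core_equivalents(8, 100)        # 8 full-speed CPU cores
gpu_slow = core_equivalents(2000, 1)  # 2,000 cores at 1% speed
gpu_fast = core_equivalents(2000, 10) # 2,000 cores at 10% speed

print(f"CPU: {cpu} core-equivalents")
print(f"GPU: {gpu_slow} to {gpu_fast} core-equivalents")
```

Under these assumptions the GPU wins only when the job can keep thousands of cores busy at once. A task that runs on a single core would run at 1% to 10% of CPU speed.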

So Should I Get a GPU…?

Usually, no (parallelising into < 10 workers is often enough)
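A sketch of what parallelising into a handful of CPU workers can look like with Python's standard library. The task function `slow_square` is a hypothetical stand-in for one independent unit of work, and the cap of 8 workers is an illustrative choice in line with the "fewer than 10" guidance.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def slow_square(x: int) -> int:
    """Hypothetical stand-in for one independent, CPU-bound task."""
    total = 0
    for _ in range(100_000):
        total += x * x
    return total // 100_000


def pick_workers(n_tasks: int, cap: int = 8) -> int:
    """Use at most `cap` workers, never more than tasks or available cores."""
    available = os.cpu_count() or 1
    return max(1, min(n_tasks, cap, available))


if __name__ == "__main__":
    tasks = list(range(20))
    # Each worker is a separate process, so tasks run on separate cores.
    with ProcessPoolExecutor(max_workers=pick_workers(len(tasks))) as pool:
        results = list(pool.map(slow_square, tasks))
    print(results[:5])
```

On a machine with a single core, `pick_workers` returns 1 and the pool brings no speed-up, which is a sign that more cores are needed before reaching for a GPU.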

You’ll know you need a GPU when you get there

Discuss…

decisions, decisions

RAM

Basically short-term memory. When a process ends, everything it held in RAM is destroyed (like when you quit RStudio)

Get a computer with lots of RAM, probably

\(\textrm{Necessary RAM} = \textrm{max amount of data} \times 3\)

- If it’s too big, move to disk (e.g. database, Arrow, dask, HDF5)
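The RAM rule above can be sketched as a quick check. The function names and the "in-memory" / "move to disk" labels are hypothetical, and the factor of 3 is the slide's rule of thumb rather than a precise measurement of any particular library.

```python
def necessary_ram_gb(max_data_gb: float, factor: float = 3.0) -> float:
    """Rule of thumb: RAM needed is about 3x the largest dataset."""
    return max_data_gb * factor


def plan(max_data_gb: float, available_ram_gb: float) -> str:
    """Decide whether the data can reasonably be processed in memory."""
    if necessary_ram_gb(max_data_gb) <= available_ram_gb:
        return "in-memory"
    # Too big: use an on-disk tool (database, Arrow, Dask, HDF5).
    return "move to disk"


print(plan(4, 16))   # 4 GB of data needs ~12 GB of RAM
print(plan(10, 16))  # 10 GB of data needs ~30 GB of RAM
```

Note that `max_data_gb` means the size of the data once loaded, which can differ from the size of the file on disk.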

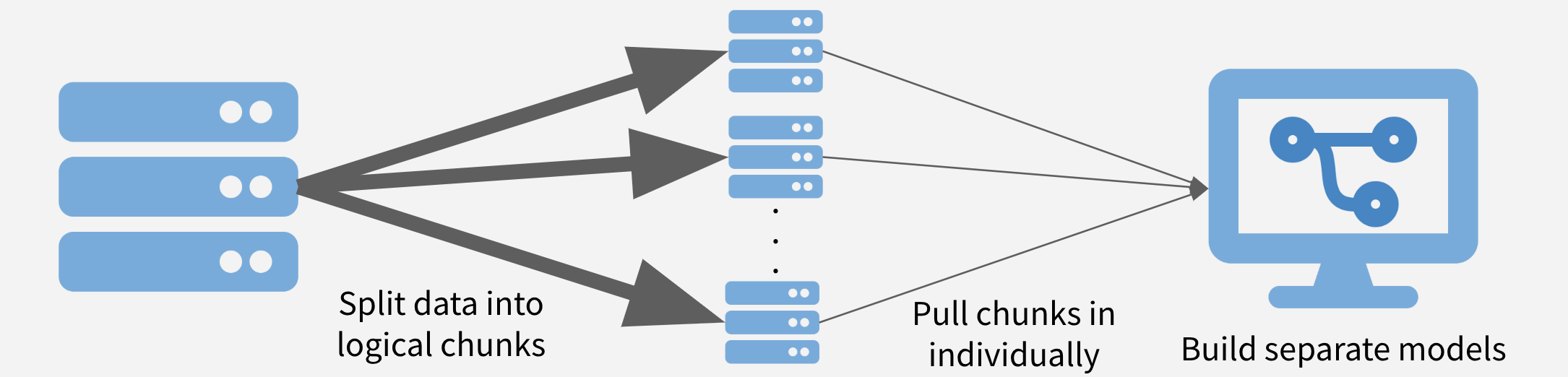

Big data in R

Disk (Storage)

Where physical files live and persist

The bigger the better (and storage is cheap nowadays)

\(\textrm{Necessary Storage} = \textrm{approx data} + 1 \textrm{GB} \times n \textrm{ users}\)
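The storage estimate above, written out as a small helper. The name `necessary_storage_gb` and the example figures (50 GB of data, 10 users) are illustrative assumptions.

```python
def necessary_storage_gb(
    approx_data_gb: float, n_users: int, per_user_gb: float = 1.0
) -> float:
    """Rule of thumb: the data itself plus about 1 GB per user."""
    return approx_data_gb + per_user_gb * n_users


# Example: roughly 50 GB of data shared by 10 users.
print(necessary_storage_gb(50, 10))
```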

Scenarios

You try to load a big CSV file into pandas in Python. It churns for a while and then crashes.

You go to build a new ML model on your data. You’d like to re-train the model once a day, but it turns out training this model takes 26 hours on your laptop.

You design a visualization in Matplotlib and create a whole bunch of them in a loop. You want to parallelize the operation. Right now you’re running on a t2.small with 1 CPU.

Lab

Not much to say here…🤷