1. Declare the base image.
2. Copy `data.csv` from the host's working directory to the container's data directory.
3. Print the first few rows of `data.csv`.
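A minimal Dockerfile matching the three annotated steps might look like the sketch below. The base image (`ubuntu:22.04`) and the container path `/data` are assumptions. `head` stands in for whatever tool the original example used to print the rows.

```dockerfile
# 1. Declare the base image (ubuntu:22.04 is an assumed choice)
FROM ubuntu:22.04

# 2. Copy data.csv from the build context (the host's working directory
#    passed to `docker build`) into the container's data directory.
#    /data is an assumed path.
COPY data.csv /data/data.csv

# 3. Print the first few rows of data.csv when a container starts
CMD ["head", "-n", "5", "/data/data.csv"]
```

Built with `docker build -t csv-preview .` and run with `docker run --rm csv-preview`, this prints the first five lines of the file (the header plus four rows). The tag `csv-preview` is hypothetical.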

Demystifying Docker

Learning objectives

- Decide whether a container is the right tool for a given job.

- Download and run pre-built Docker images.

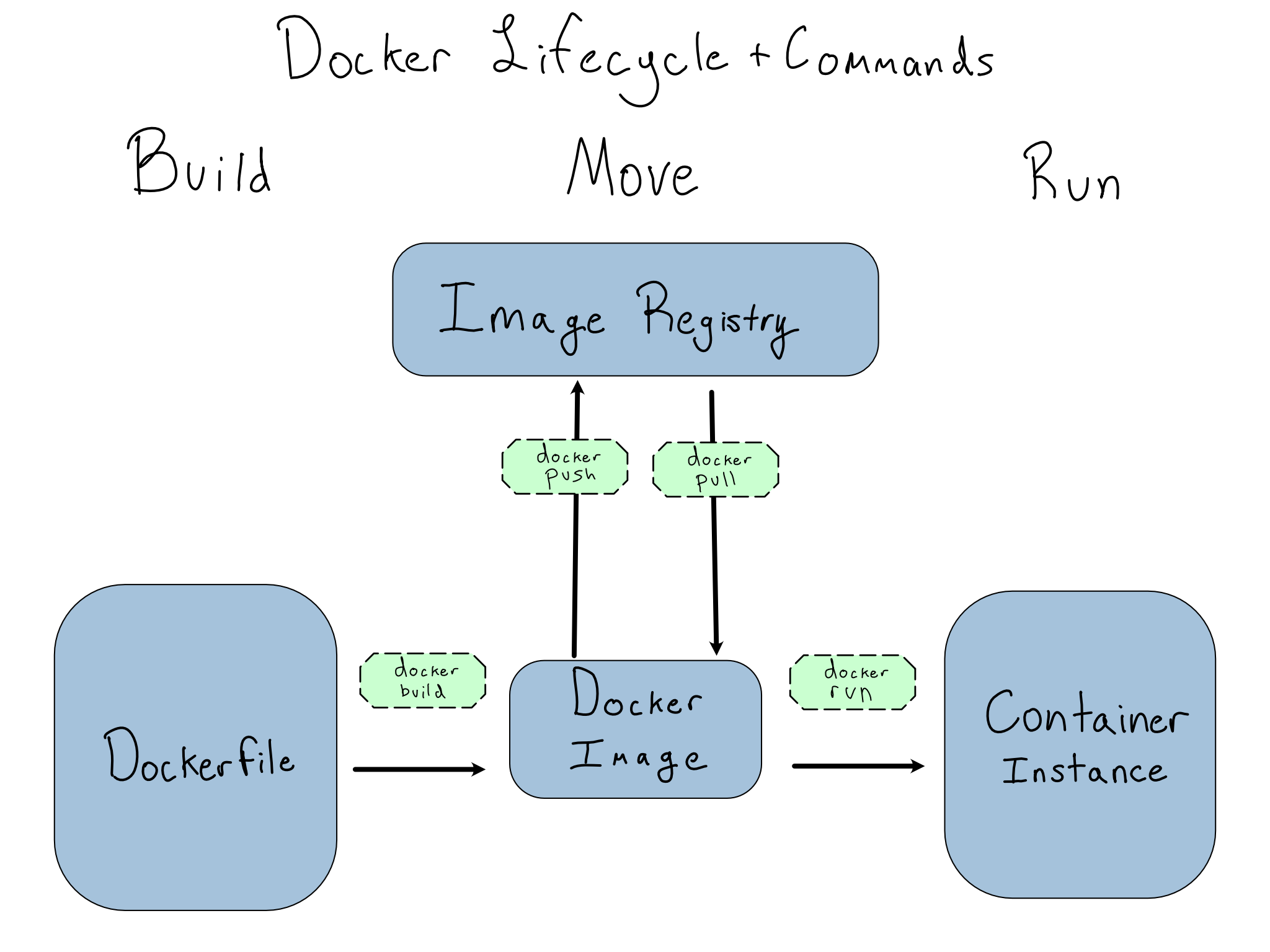

- Describe the stages of the Docker container lifecycle.

- Build simple Dockerfiles for your own projects.

Why Docker matters for data science

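Docker creates standardized environments that are:

- Reproducible: everyone gets the same software versions.
- Portable: the same image can run on a laptop, a server, or in the cloud.
- Collaborative: teammates can share one image, e.g., via Docker Hub.
- Scalable: one image can start many identical containers.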

What is Docker?

- An open-source tool for building, sharing, and running software.
- Specify your environment in a Dockerfile:
  - Dockerfiles build Docker images.
  - Dockerfiles are plain-text files made of instructions such as `FROM`, `RUN`, `COPY`, and `CMD`.
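To make the four instructions concrete, here is a sketch of a small data-science Dockerfile. The base image tag, the unpinned `pandas` install, and the script name `analysis.py` are illustrative assumptions, not part of the original slides.

```dockerfile
# FROM: start from an official Python base image (tag is an assumption)
FROM python:3.12-slim

# RUN: execute a command at build time; here, install pandas into the image.
# Pinning a version would make the build stricter about reproducibility.
RUN pip install --no-cache-dir pandas

# COPY: add files from the build context into the image.
# analysis.py is a hypothetical script.
COPY data.csv /data/data.csv
COPY analysis.py /app/analysis.py

# CMD: the default command a container runs when it starts
CMD ["python", "/app/analysis.py"]
```

Note the split in timing: `RUN` executes while the image is being built, whereas `CMD` only records what to run when a container starts. `RUN` and `COPY` each add a filesystem layer to the image.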

- Docker images are a snapshot of your environment:
  - They bundle the software you need (e.g., OS, packages, data).
  - They can be shared with others via Docker Hub.
  - In principle, an image can serve as a standalone project.

- A container is a running, ephemeral instance of a Docker image:
  - By default, changes made inside a container are not saved to the image and are lost once the container is removed.
  - Mounted volumes let data persist across containers started from the same image.
  - A container is a process running on top of the image's read-only layers, plus a thin writable layer of its own.
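The sketch below illustrates how a mounted volume keeps data beyond a single container. The image name `my-image` and the log file `runs.log` are hypothetical. `VOLUME` is a real Dockerfile instruction, but the host directory is supplied at run time with `docker run -v`.

```dockerfile
FROM ubuntu:22.04

# Declare /data as a mount point for data that should outlive any single
# container. VOLUME only marks the path; the data source is chosen at run
# time, for example by bind-mounting a host directory:
#   docker run --rm -v "$(pwd)/data:/data" my-image
VOLUME /data

# Append a timestamp to a log in /data, then show the whole log.
# Files written outside /data land in the container's writable layer
# and disappear when the container is removed.
CMD ["sh", "-c", "date >> /data/runs.log && cat /data/runs.log"]
```

Running the bind-mount command twice should show two timestamps, because both containers write to the same host directory. Without `-v`, each `--rm` container gets a fresh, empty `/data` and the log starts over.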